WWDC 2018 for iOS developers:

Siri Shortcuts

In 2016 Apple released SiriKit as a way for your apps to work with Siri. I was really excited because it created an entirely new way for users to interact with their phones. They weren’t limited to touch (gestures, force, keyboard) anymore. Instead, they could finally use their voice with any app that supported it. It was only while I was indulging in this idea that I realized this wasn’t the case. SiriKit usage was limited to 6 categories, namely:

- Messaging

- VoIP calling

- Payment

- Ride booking

- Photo search

- Workouts

This really sucked. I was bummed and didn’t look deeper into it, as no project on my list could benefit from it.

Fast-forward to 2018. On Monday, in its keynote, Apple finally announced a new way to interact with Siri. It’s called Siri Shortcuts. Let’s dive into how iOS developers can use them.

Shortcuts

So what are Siri Shortcuts? They are actions Siri can predict and offer to the user in places such as Spotlight search and the Lock Screen. Your app provides these actions to Siri while the user interacts with it. For example, when a user orders a coffee at your store, your app can provide an action with the order information to Siri. Over time, Siri learns whether the user does this repeatedly and starts suggesting the action your app provided. This way a coffee can be ordered right from the Lock Screen. Isn’t that nice?

That’s not all! Users can even use these shortcuts to add personalized voice phrases. So next time you tell Siri “Gibberish”, Siri will order your coffee.

But how do we do this?

The Fast Way

If you are already using NSUserActivity, you can make your activities eligible for predictions by Siri with one line of code:

userActivity.isEligibleForPrediction = true
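For context, here is a minimal sketch of what a complete activity might look like. The function name, activity type string, and title are made up for illustration; only the NSUserActivity properties themselves are real API.

```swift
import Foundation

// Builds an activity describing a coffee order.
// "com.example.coffee.order" is a hypothetical identifier; a real app would
// also list it under NSUserActivityTypes in its Info.plist.
@available(iOS 12.0, *)
func makeOrderCoffeeActivity() -> NSUserActivity {
    let activity = NSUserActivity(activityType: "com.example.coffee.order")
    activity.title = "Order coffee"
    activity.isEligibleForSearch = true
    // The one line from above: lets Siri suggest this activity as a shortcut.
    activity.isEligibleForPrediction = true
    return activity
}
```

Assigning the result to a view controller’s userActivity property, or calling becomeCurrent() on it, is what actually hands the activity to the system.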

But shortcuts can be way more powerful. So let's see how custom shortcuts work.

App Structure

As before, to use SiriKit we need to add an Intents app extension to our app. This extension handles the shortcuts that run in the background. Furthermore, Apple suggests moving code that is shared between the extension and the app into a framework. This framework should contain all the code for managing and donating shortcuts.

Creating Shortcuts

Look at your app and check which actions occur most often. Let’s take a fitness app that can play music. Whenever you start your workout, you want to listen to music, so the app can donate a corresponding shortcut to Siri. Now, whenever Siri detects that the user is starting their workout routine, it can suggest playing music via your app. Of course, Siri has to learn this first, so a few workouts have to happen before Siri will make these suggestions. I do ballroom dancing. As I always warm up by practicing Slow Waltz for 15 minutes, my app could create a shortcut suggesting precisely this and donate it.

Before we look into how we donate a shortcut, let’s see how to create one.

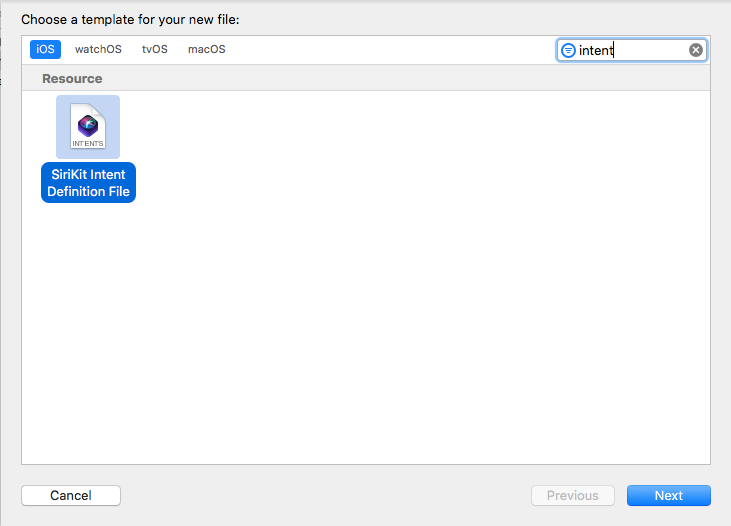

First, we need a new type of intent. Intents are the objects used to communicate with Siri. In our case the system doesn’t provide such an intent, so the app has to provide a custom intent. This can be done by adding an Intent Definition file to your project.

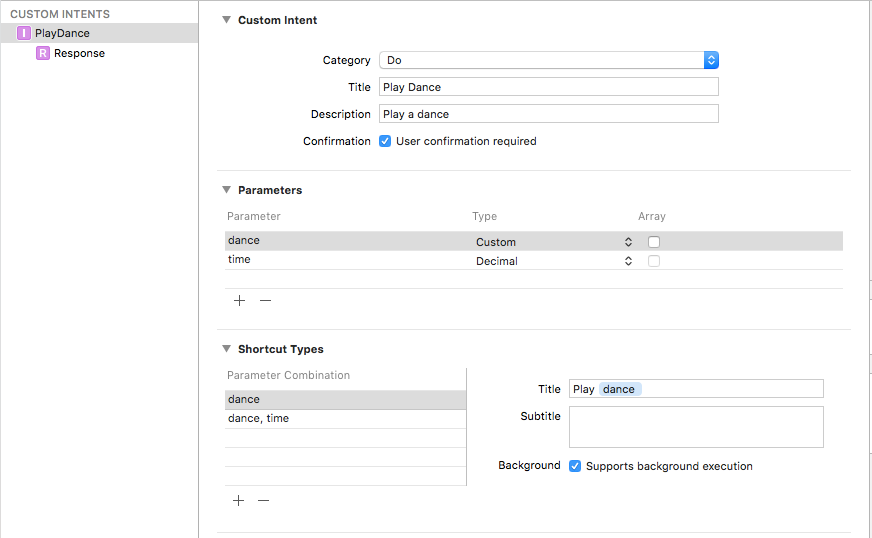

Within it, you can define your custom intent. Let’s call it ‘DancePracticeIntent’.

The intent has 2 parameters:

- The dance to practice

- and the duration, after which it should automatically stop playing.

Furthermore, we can use these parameters to define different shortcut types. In my case, these would be:

- dance (no duration means unlimited playback)

- dance, duration.

Siri uses these types to identify requests the user makes, such as “Play Tango for 20 minutes” or “Play Slow Foxtrot”.

We could mark each shortcut with Supports background execution, so the app wouldn’t be opened when the shortcut runs and the user would stay on the Lock Screen.

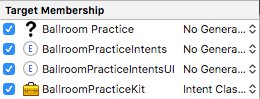

Having defined our custom intent in the Intent Definition file, Xcode can generate the corresponding source code for us. However, since we share the code, we have to specify which target gets the generated code; otherwise, it will be generated for every target. This can be done in the target settings. Set it for the framework to <Intent Classes> and for all other targets to <No Generated Classes>.

Donating Shortcuts

With all this done, we can finally donate our shortcuts. Whenever a user starts a corresponding interaction with our app, we simply create a matching intent. This intent is used to create an INInteraction, which finally donates our shortcut. Because of this phrasing, the actions themselves are also called donations.

As a side note: Apple doesn’t want you to donate intents handled by your Intents app extension. Siri already knows about those intents (otherwise they wouldn’t end up in the extension) and will consider them when predicting suggestions.
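A donation can be sketched roughly as follows. The helper name donate(_:) is my own; in the running example the caller would pass a DancePracticeIntent, i.e. the class Xcode generates from the Intent Definition file, with its dance and duration filled in. The sketch takes a plain INIntent so that it does not depend on generated code.

```swift
import Intents

// Donates an interaction for the given intent.
// Hypothetical helper: the intent passed in would normally be the generated
// DancePracticeIntent with its parameters set.
func donate(_ intent: INIntent) {
    // A nil response is enough here: we only record what the user did.
    let interaction = INInteraction(intent: intent, response: nil)
    interaction.donate { error in
        if let error = error {
            print("Donation failed: \(error.localizedDescription)")
        }
    }
}
```

Calling this every time the user starts a practice session is what gives Siri enough data to start predicting the action.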

Handling Intents

Handling your intents hasn’t changed. You still check which type of intent was passed into your intent handler and then react accordingly.

Sometimes you will want to launch your app to react to an intent. To do so, implement application(_:continue:restorationHandler:) in your AppDelegate. This gives you an easy way to handle the intent inside the app.
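As a rough sketch of that type check, the following uses the system-provided INStartWorkoutIntent so that it compiles without generated code. For the custom intent from this post you would check for DancePracticeIntent instead and conform to the handling protocol Xcode generates for it. The class name StartWorkoutHandler is an assumption for illustration.

```swift
import Intents

// Handles one specific intent type. A custom intent gets an analogous
// handler that conforms to its Xcode-generated handling protocol.
final class StartWorkoutHandler: NSObject, INStartWorkoutIntentHandling {
    func handle(intent: INStartWorkoutIntent,
                completion: @escaping (INStartWorkoutIntentResponse) -> Void) {
        // Tell Siri to hand the workout over to the app.
        completion(INStartWorkoutIntentResponse(code: .continueInApp, userActivity: nil))
    }
}

// Entry point of the Intents app extension.
final class IntentHandler: INExtension {
    override func handler(for intent: INIntent) -> Any {
        // Check which type of intent we were given and pick a handler for it.
        if intent is INStartWorkoutIntent {
            return StartWorkoutHandler()
        }
        return self
    }
}
```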
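A minimal sketch of that delegate method, assuming the app only needs to know which intent it was launched for. The print call stands in for whatever routing your app actually does.

```swift
import UIKit
import Intents

class AppDelegate: UIResponder, UIApplicationDelegate {
    var window: UIWindow?

    func application(_ application: UIApplication,
                     continue userActivity: NSUserActivity,
                     restorationHandler: @escaping ([UIUserActivityRestoring]?) -> Void) -> Bool {
        // When the app is opened for an intent, the activity carries the
        // interaction that triggered it.
        guard let intent = userActivity.interaction?.intent else {
            return false // not an intent-based launch
        }
        // Placeholder: a real app would cast to its own intent class here
        // and start the matching flow.
        print("Launched for intent: \(type(of: intent))")
        return true
    }
}
```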

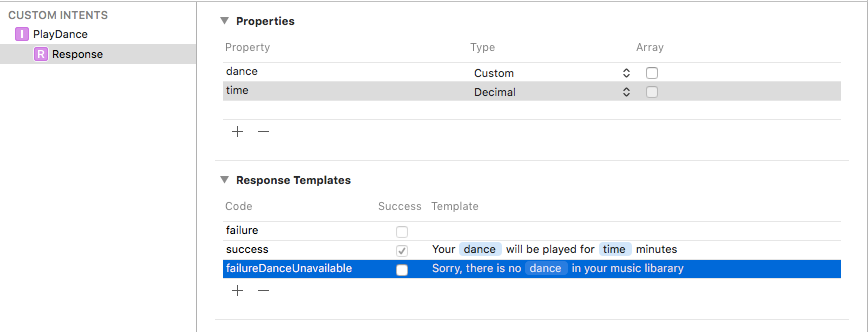

Custom Responses

We can also respond to intents. Imagine there is no song categorized as a Slow Waltz in your music collection. The intent to play Slow Waltz would result in an error, and we would like to inform the user about it.

An intent response can also be created within your Intent Definition file.

It contains predefined texts with placeholders. To pass a response to the user, use the completion handler of your intent handler’s confirm(intent:completion:) or handle(intent:completion:) method.

Relevant Shortcuts

As mentioned earlier, it would be nice for the app to suggest playing music as soon as I start practicing. This is what relevant shortcuts are for. You wrap your INIntent in an INShortcut, add that to an INRelevantShortcut, and attach an INRelevanceProvider. In our case, it would be an INLocationRelevanceProvider, which basically tells Siri to show this relevant shortcut when the user reaches a specific location.
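Put together, registering a location-based relevant shortcut might look like the sketch below. The function name, the 100 m radius, and the region identifier are assumptions for illustration; the intent would again be the generated DancePracticeIntent.

```swift
import Intents
import CoreLocation

// Registers a relevant shortcut that becomes relevant near `center`.
@available(iOS 12.0, *)
func suggest(_ intent: INIntent, near center: CLLocationCoordinate2D) {
    // INShortcut(intent:) is failable, so bail out if no shortcut can be made.
    guard let shortcut = INShortcut(intent: intent) else { return }

    let relevantShortcut = INRelevantShortcut(shortcut: shortcut)
    // Hypothetical region: 100 meters around the dance studio.
    let region = CLCircularRegion(center: center, radius: 100, identifier: "dance-studio")
    relevantShortcut.relevanceProviders = [INLocationRelevanceProvider(region: region)]

    // This call replaces any relevant shortcuts the app registered before.
    INRelevantShortcutStore.default.setRelevantShortcuts([relevantShortcut]) { error in
        if let error = error {
            print("Could not set relevant shortcuts: \(error.localizedDescription)")
        }
    }
}
```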

Adding Phrases to Siri

Last but not least, developers can offer users the option to add custom phrases. These can be used to interact with your app. My example above was “Gibberish”. Which phrases to use is up to the user, but we can make suggestions. Since it’s up to the user, the problem of collisions is not our problem ;)

To record a phrase, we create an INUIAddVoiceShortcutViewController (or an INUIEditVoiceShortcutViewController for a phrase that already exists) and set ourselves as its delegate. This way we can handle new or changed voice shortcuts.
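Here is a rough sketch for the case where no phrase exists yet, for which IntentsUI provides INUIAddVoiceShortcutViewController. The class name PhraseViewController and the method presentAddPhrase(for:) are made up; the delegate methods are the ones the framework requires.

```swift
import UIKit
import Intents
import IntentsUI

@available(iOS 12.0, *)
final class PhraseViewController: UIViewController, INUIAddVoiceShortcutViewControllerDelegate {

    // Shows the system UI in which the user records a phrase for `shortcut`.
    func presentAddPhrase(for shortcut: INShortcut) {
        let controller = INUIAddVoiceShortcutViewController(shortcut: shortcut)
        controller.delegate = self
        present(controller, animated: true)
    }

    // Called when the user saved a phrase or saving failed.
    func addVoiceShortcutViewController(_ controller: INUIAddVoiceShortcutViewController,
                                        didFinishWith voiceShortcut: INVoiceShortcut?,
                                        error: Error?) {
        if let voiceShortcut = voiceShortcut {
            print("Recorded phrase: \(voiceShortcut.invocationPhrase)")
        }
        controller.dismiss(animated: true)
    }

    // Called when the user backed out without recording anything.
    func addVoiceShortcutViewControllerDidCancel(_ controller: INUIAddVoiceShortcutViewController) {
        controller.dismiss(animated: true)
    }
}
```

To suggest a phrase, set the intent’s suggestedInvocationPhrase before creating the shortcut; the user is still free to record something else.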

Conclusion

This was a short overview of this exciting new API. Users can finally interact with every app in even more ways. I’m curious to see how developers will use it, and I would really love to hear what you plan to do with Siri Shortcuts.

For completeness, Apple has also increased the above list of supported intent domains to 12:

- Messaging

- Lists and Notes

- Workouts

- Payments

- VoIP Calling

- Media

- Visual Codes (Convey contact and payment information using Quick Response (QR) codes.)

- Photos

- Ride Booking

- Car Commands (Manage vehicle door locks etc.)

- CarPlay (Interact with vehicle’s CarPlay system)

- Restaurant Reservations

Still, shortcuts will top it all.

Further Resources

- developer.apple.com/documentati…

- developer.apple.com/documentati…

- developer.apple.com/documentati…

- developer.apple.com/videos/play…