版权声明:本套技术专栏是作者(秦凯新)平时工作的总结和升华,通过从真实商业环境抽取案例进行总结和分享,并给出商业应用的调优建议和集群环境容量规划等内容,请持续关注本套博客。QQ邮箱地址:1120746959@qq.com,如有任何技术交流,可随时联系。

1 线性回归问题实践(车的能耗预测)

-

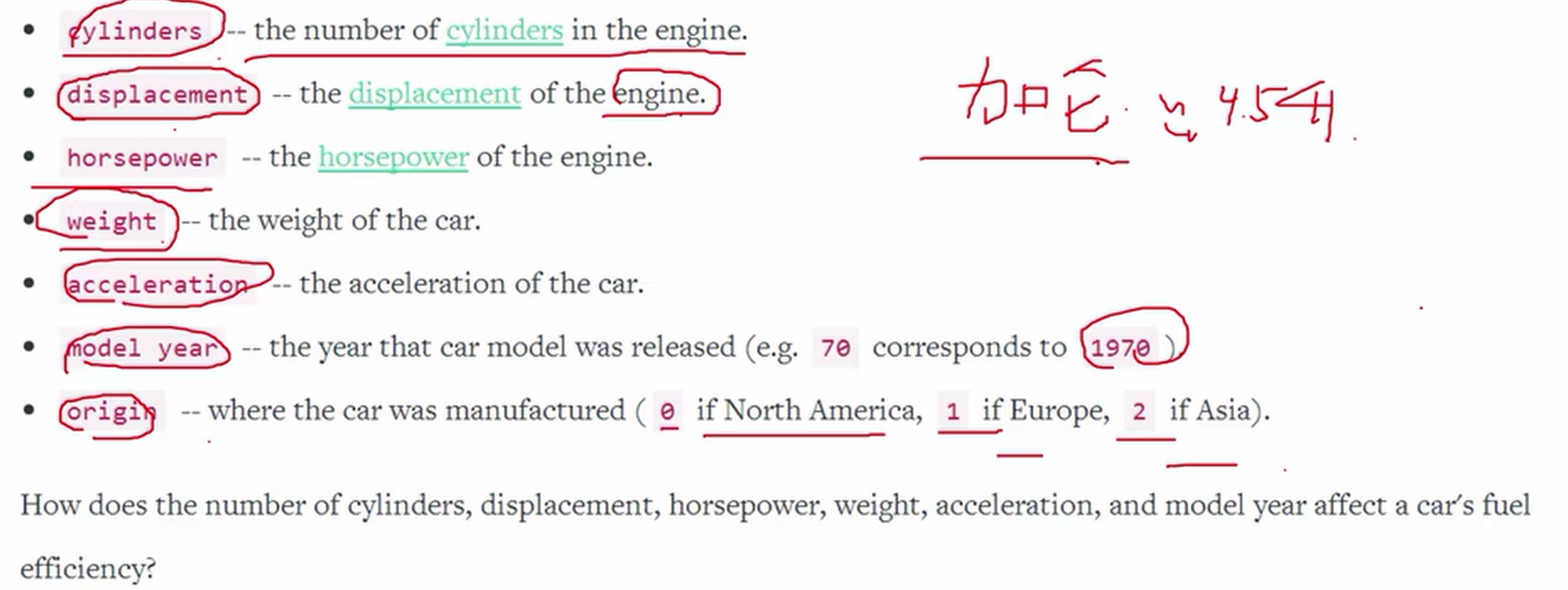

数据集欣赏

import pandas as pd import matplotlib.pyplot as plt columns = ["mpg", "cylinders", "displacement", "horsepower", "weight", "acceleration", "model year", "origin", "car name"] cars = pd.read_table("C:\\ML\\MLData\\auto-mpg.data", delim_whitespace=True, names=columns) print(cars.head(5)) mpg cylinders displacement horsepower weight acceleration model year \ 0 18.0 8 307.0 130.0 3504.0 12.0 70 1 15.0 8 350.0 165.0 3693.0 11.5 70 2 18.0 8 318.0 150.0 3436.0 11.0 70 3 16.0 8 304.0 150.0 3433.0 12.0 70 4 17.0 8 302.0 140.0 3449.0 10.5 70 origin car name 0 1 chevrolet chevelle malibu 1 1 buick skylark 320 2 1 plymouth satellite 3 1 amc rebel sst 4 1 ford torino -

查看数据集分布( ax=ax1指定所在区域)

fig = plt.figure() ax1 = fig.add_subplot(2,1,1) ax2 = fig.add_subplot(2,1,2) cars.plot("weight", "mpg", kind='scatter', ax=ax1) cars.plot("acceleration", "mpg", kind='scatter', ax=ax2) plt.show()

-

建立线性回归模型

import sklearn from sklearn.linear_model import LinearRegression lr = LinearRegression() lr.fit(cars[["weight"]], cars["mpg"]) -

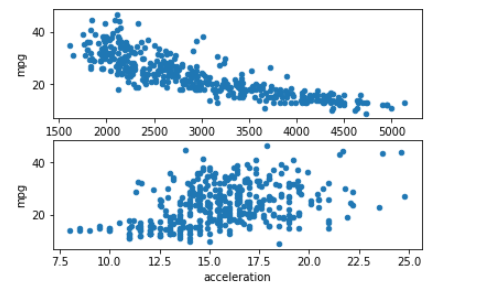

fit进行线性训练,predict进行预测并展示

import sklearn from sklearn.linear_model import LinearRegression lr = LinearRegression(fit_intercept=True) lr.fit(cars[["weight"]], cars["mpg"]) predictions = lr.predict(cars[["weight"]]) print(predictions[0:5]) print(cars["mpg"][0:5]) [19.41852276 17.96764345 19.94053224 19.96356207 19.84073631] 0 18.0 1 15.0 2 18.0 3 16.0 4 17.0 Name: mpg, dtype: float64 -

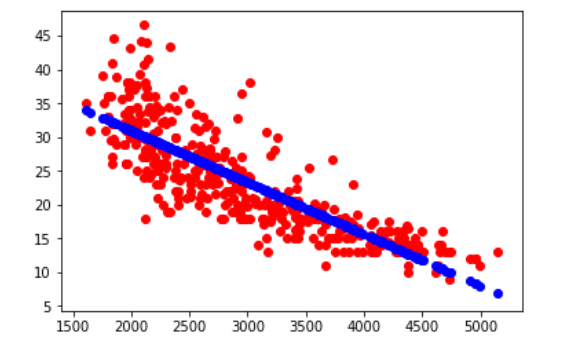

预测与实际值的分布

plt.scatter(cars["weight"], cars["mpg"], c='red') plt.scatter(cars["weight"], predictions, c='blue') plt.show()

-

均方差指标

lr = LinearRegression() lr.fit(cars[["weight"]], cars["mpg"]) predictions = lr.predict(cars[["weight"]]) from sklearn.metrics import mean_squared_error mse = mean_squared_error(cars["mpg"], predictions) print(mse) 18.780939734628397 -

均方根误差指标

mse = mean_squared_error(cars["mpg"], predictions) rmse = mse ** (0.5) print (rmse) 4.333698159150957

2 逻辑回归问题实践

-

数据集欣赏

import pandas as pd import matplotlib.pyplot as plt admissions = pd.read_csv("C:\\ML\\MLData\\admissions.csv") print (admissions.head()) plt.scatter(admissions['gpa'], admissions['admit']) plt.show() admit gpa gre 0 0 3.177277 594.102992 1 0 3.412655 631.528607 2 0 2.728097 553.714399 3 0 3.093559 551.089985 4 0 3.141923 537.184894 <Figure size 640x480 with 1 Axes> -

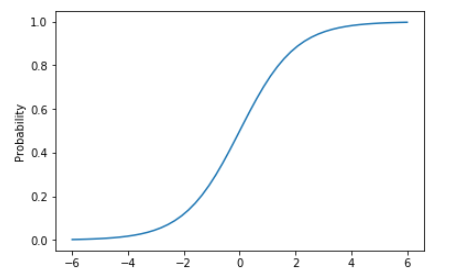

sigmod函数欣赏

import numpy as np # Logit Function def logit(x): # np.exp(x) raises x to the exponential power, ie e^x. e ~= 2.71828 return np.exp(x) / (1 + np.exp(x)) # Generate 50 real values, evenly spaced, between -6 and 6. x = np.linspace(-6,6,50, dtype=float) # Transform each number in t using the logit function. y = logit(x) # Plot the resulting data. plt.plot(x, y) plt.ylabel("Probability") plt.show()

-

逻辑回归

from sklearn.linear_model import LinearRegression linear_model = LinearRegression() linear_model.fit(admissions[["gpa"]], admissions["admit"]) from sklearn.linear_model import LogisticRegression logistic_model = LogisticRegression() logistic_model.fit(admissions[["gpa"]], admissions["admit"]) LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True, intercept_scaling=1, max_iter=100, multi_class='warn', n_jobs=None, penalty='l2', random_state=None, solver='warn', tol=0.0001, verbose=0, warm_start=False) -

逻辑回归概率值预测

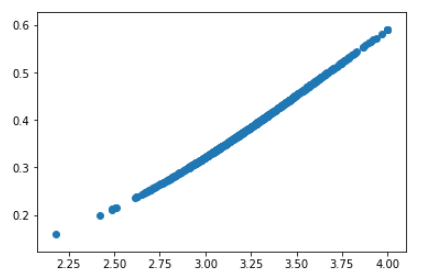

logistic_model = LogisticRegression() logistic_model.fit(admissions[["gpa"]], admissions["admit"]) pred_probs = logistic_model.predict_proba(admissions[["gpa"]]) print(pred_probs) ## pred_probs[:,1] 接收的可能性 pred_probs[:,0] 没有被接收的可能性 plt.scatter(admissions["gpa"], pred_probs[:,1]) plt.show()

-

逻辑回归分类值预测

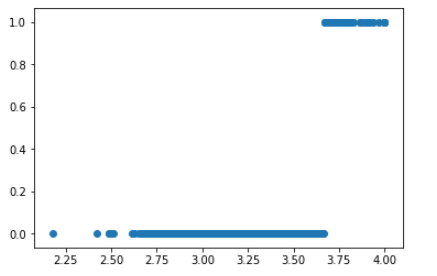

logistic_model = LogisticRegression() logistic_model.fit(admissions[["gpa"]], admissions["admit"]) fitted_labels = logistic_model.predict(admissions[["gpa"]]) plt.scatter(admissions["gpa"], fitted_labels) plt.show()

-

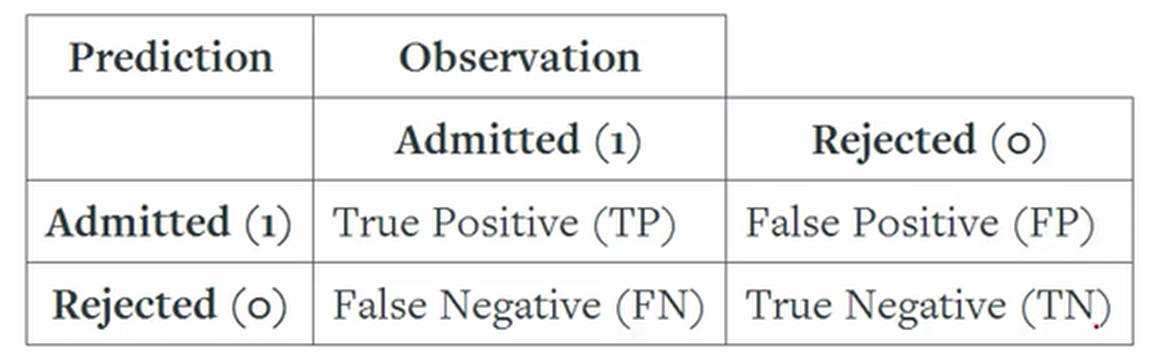

逻辑回归模型评估

-

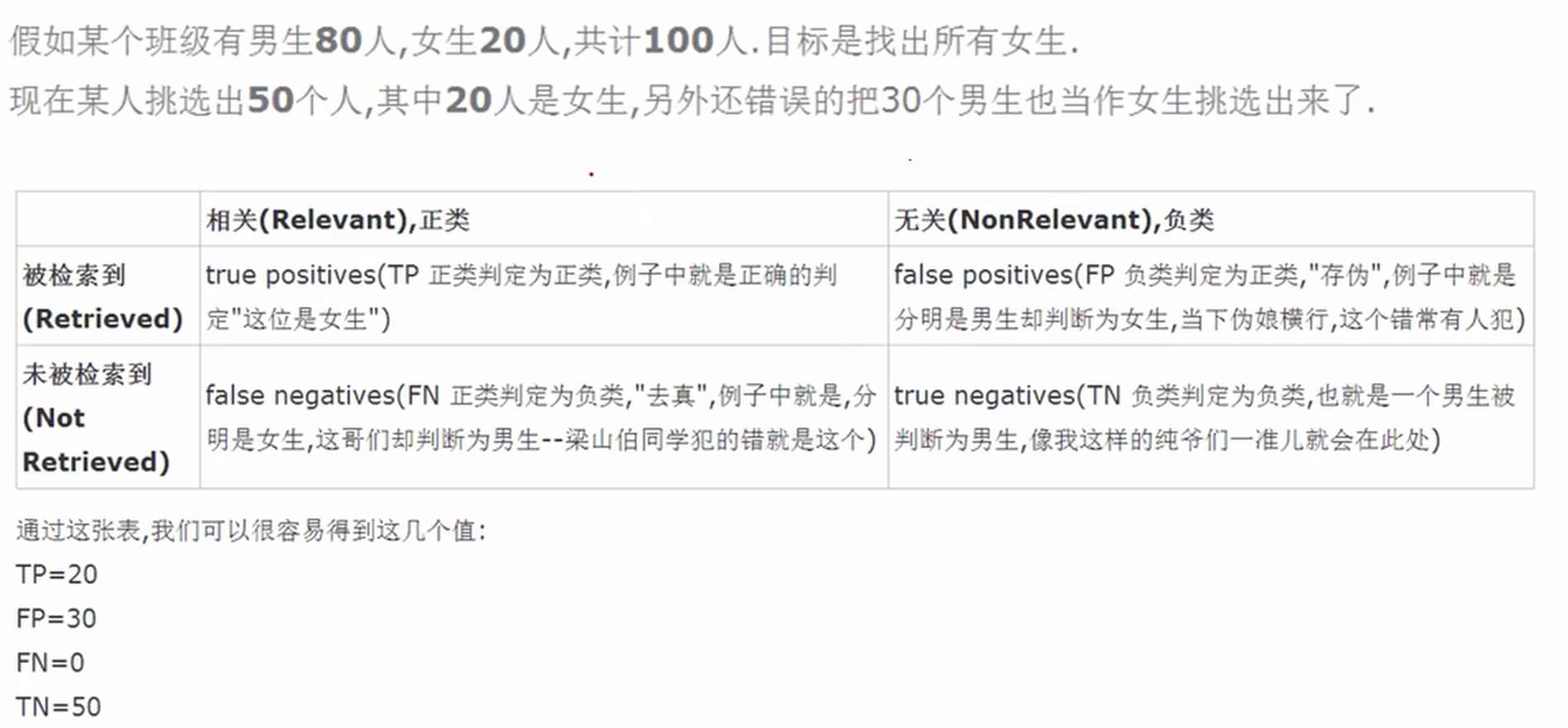

模型评估案例分析(先找目标,比如女生,它就是正例P)

-

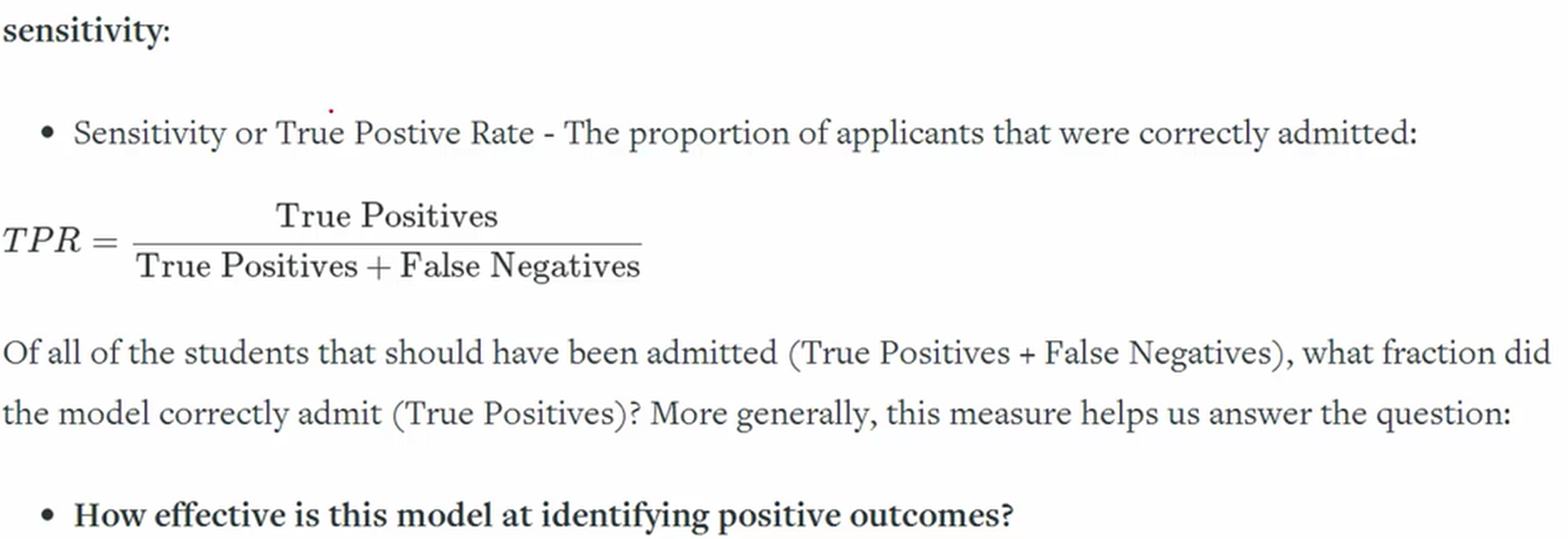

TPR 指标(召回率,不均衡的情况下,精确度是有问题的100个样本,10个异常的,及时异常全部预测正确了,也能达到90%的概率。检查正例的效果。比如在所有女生的样本下)

-

精确度

admissions["actual_label"] = admissions["admit"] matches = admissions["predicted_label"] == admissions["actual_label"] correct_predictions = admissions[matches] print(correct_predictions.head()) accuracy = len(correct_predictions) / float(len(admissions)) print(accuracy) admit gpa gre predicted_label actual_label 0 0 3.177277 594.102992 0 0 1 0 3.412655 631.528607 0 0 2 0 2.728097 553.714399 0 0 3 0 3.093559 551.089985 0 0 4 0 3.141923 537.184894 0 0 0.645962732919 -

TPR指标(检查正例的效果)

true_positive_filter = (admissions["predicted_label"] == 1) & (admissions["actual_label"] == 1) true_positives = len(admissions[true_positive_filter]) true_negative_filter = (admissions["predicted_label"] == 0) & (admissions["actual_label"] == 0) true_negatives = len(admissions[true_negative_filter]) print(true_positives) print(true_negatives) 31 385 true_positive_filter = (admissions["predicted_label"] == 1) & (admissions["actual_label"] == 1) true_positives = len(admissions[true_positive_filter]) false_negative_filter = (admissions["predicted_label"] == 0) & (admissions["actual_label"] == 1) false_negatives = len(admissions[false_negative_filter]) sensitivity = true_positives / float((true_positives + false_negatives)) print(sensitivity) 0.127049180328 -

FPR 指标(检查负例的效果)

true_positive_filter = (admissions["predicted_label"] == 1) & (admissions["actual_label"] == 1) true_positives = len(admissions[true_positive_filter]) false_negative_filter = (admissions["predicted_label"] == 0) & (admissions["actual_label"] == 1) false_negatives = len(admissions[false_negative_filter]) true_negative_filter = (admissions["predicted_label"] == 0) & (admissions["actual_label"] == 0) true_negatives = len(admissions[true_negative_filter]) false_positive_filter = (admissions["predicted_label"] == 1) & (admissions["actual_label"] == 0) false_positives = len(admissions[false_positive_filter]) specificity = (true_negatives) / float((false_positives + true_negatives)) print(specificity) 0.9625

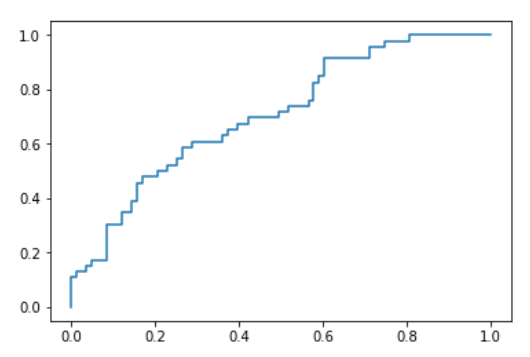

3 ROC 综合评价指标(看面积,通过不同的阈值,观察TPR及FPR都接近于1最好)

-

使用numpy来洗牌(permutation洗牌后会返回index)

import numpy as np np.random.seed(8) admissions = pd.read_csv("C:\\ML\\MLData\\admissions.csv") admissions["actual_label"] = admissions["admit"] admissions = admissions.drop("admit", axis=1) #permutation洗牌后会返回index shuffled_index = np.random.permutation(admissions.index) #print shuffled_index shuffled_admissions = admissions.loc[shuffled_index] train = shuffled_admissions.iloc[0:515] test = shuffled_admissions.iloc[515:len(shuffled_admissions)] print(shuffled_admissions.head()) gpa gre actual_label 260 3.177277 594.102992 0 173 3.412655 631.528607 0 256 2.728097 553.714399 0 167 3.093559 551.089985 0 400 3.141923 537.184894 0 -

洗牌后的准确率

shuffled_index = np.random.permutation(admissions.index) shuffled_admissions = admissions.loc[shuffled_index] train = shuffled_admissions.iloc[0:515] test = shuffled_admissions.iloc[515:len(shuffled_admissions)] model = LogisticRegression() model.fit(train[["gpa"]], train["actual_label"]) labels = model.predict(test[["gpa"]]) test["predicted_label"] = labels matches = test["predicted_label"] == test["actual_label"] correct_predictions = test[matches] accuracy = len(correct_predictions) / float(len(test)) print(accuracy) -

TPR指标和FPR指标

model = LogisticRegression() model.fit(train[["gpa"]], train["actual_label"]) labels = model.predict(test[["gpa"]]) test["predicted_label"] = labels matches = test["predicted_label"] == test["actual_label"] correct_predictions = test[matches] accuracy = len(correct_predictions) / len(test) true_positive_filter = (test["predicted_label"] == 1) & (test["actual_label"] == 1) true_positives = len(test[true_positive_filter]) false_negative_filter = (test["predicted_label"] == 0) & (test["actual_label"] == 1) false_negatives = len(test[false_negative_filter]) sensitivity = true_positives / float((true_positives + false_negatives)) print(sensitivity) false_positive_filter = (test["predicted_label"] == 1) & (test["actual_label"] == 0) false_positives = len(test[false_positive_filter]) true_negative_filter = (test["predicted_label"] == 0) & (test["actual_label"] == 0) true_negatives = len(test[true_negative_filter]) specificity = (true_negatives) / float((false_positives + true_negatives)) print(specificity) -

ROC 曲线(根据不同预测阈值进行ROC值得求解)

import matplotlib.pyplot as plt from sklearn import metrics # 看面积(综合评价指标) probabilities = model.predict_proba(test[["gpa"]]) fpr, tpr, thresholds = metrics.roc_curve(test["actual_label"], probabilities[:,1]) print(fpr) print(tpr) print (thresholds) plt.plot(fpr, tpr) plt.show() [0. 0. 0. 0.01204819 0.01204819 0.03614458 0.03614458 0.04819277 0.04819277 0.08433735 0.08433735 0.12048193 0.12048193 0.14457831 0.14457831 0.15662651 0.15662651 0.1686747 0.1686747 0.20481928 0.20481928 0.22891566 0.22891566 0.25301205 0.25301205 0.26506024 0.26506024 0.28915663 0.28915663 0.36144578 0.36144578 0.37349398 0.37349398 0.39759036 0.39759036 0.42168675 0.42168675 0.4939759 0.4939759 0.51807229 0.51807229 0.56626506 0.56626506 0.57831325 0.57831325 0.59036145 0.59036145 0.60240964 0.60240964 0.71084337 0.71084337 0.74698795 0.74698795 0.80722892 0.80722892 1. ] [0. 0.04347826 0.10869565 0.10869565 0.13043478 0.13043478 0.15217391 0.15217391 0.17391304 0.17391304 0.30434783 0.30434783 0.34782609 0.34782609 0.39130435 0.39130435 0.45652174 0.45652174 0.47826087 0.47826087 0.5 0.5 0.52173913 0.52173913 0.54347826 0.54347826 0.58695652 0.58695652 0.60869565 0.60869565 0.63043478 0.63043478 0.65217391 0.65217391 0.67391304 0.67391304 0.69565217 0.69565217 0.7173913 0.7173913 0.73913043 0.73913043 0.76086957 0.76086957 0.82608696 0.82608696 0.84782609 0.84782609 0.91304348 0.91304348 0.95652174 0.95652174 0.97826087 0.97826087 1. 1. ] [1.56004565 0.56004565 0.54243179 0.53628712 0.51105566 0.48893268 0.48527053 0.48509715 0.4813168 0.46947103 0.45346756 0.44834885 0.44706728 0.44427478 0.43934894 0.43829984 0.43663204 0.43619054 0.43403896 0.42768342 0.42552944 0.42037834 0.41902425 0.4106753 0.40808302 0.40553849 0.40263935 0.39614129 0.39051837 0.3829498 0.38157447 0.38068362 0.38048866 0.37569719 0.37508847 0.37197763 0.36994072 0.36246461 0.36217119 0.36104391 0.36079299 0.3545887 0.354584 0.35222722 0.35015605 0.34812221 0.34735443 0.34725692 0.34146873 0.32738061 0.32312995 0.32262176 0.32259245 0.3058184 0.30278689 0.19267703]

-

综合评分的给出

from sklearn.metrics import roc_auc_score probabilities = model.predict_proba(test[["gpa"]]) # Means we can just use roc_auc_curve() instead of metrics.roc_auc_curve() #求面积 auc_score = roc_auc_score(test["actual_label"], probabilities[:,1]) print(auc_score) 0.7210690192008302

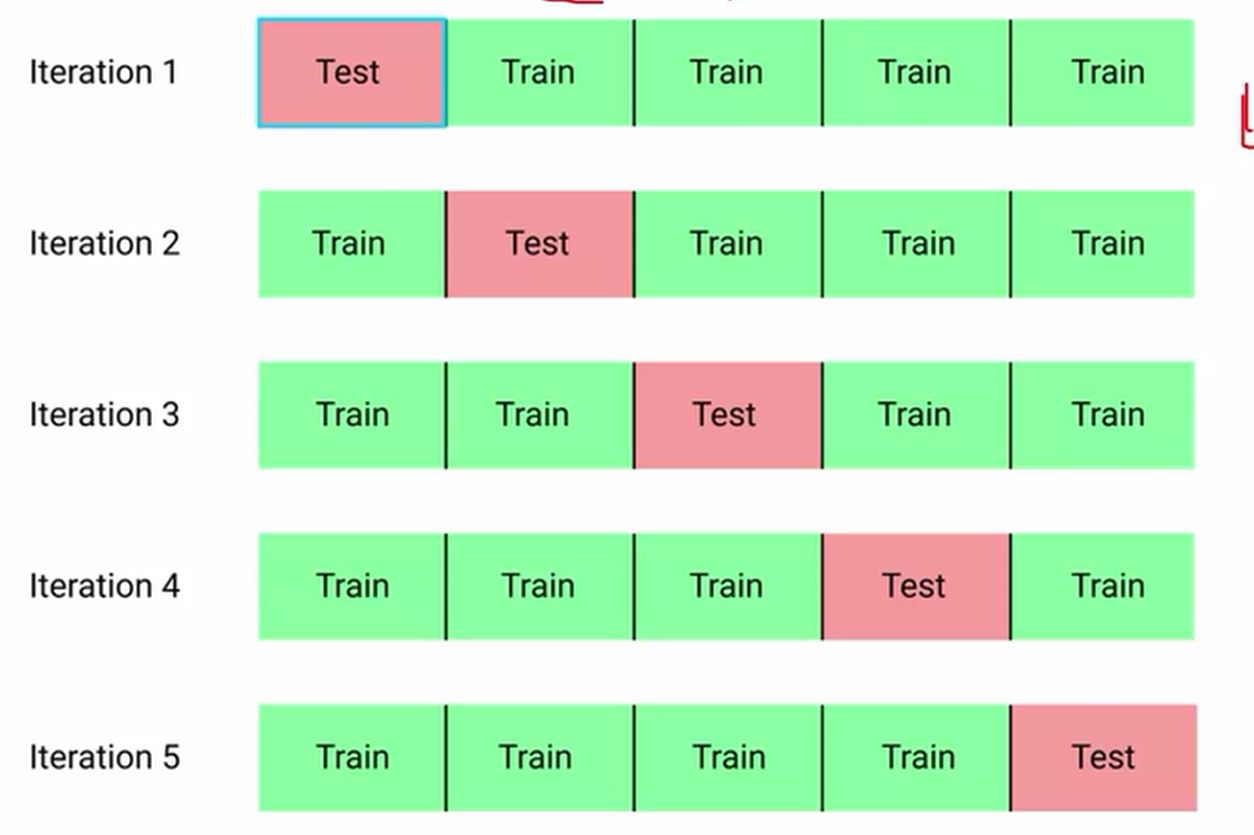

4 交叉验证

-

手写交叉验证

import pandas as pd import numpy as np admissions = pd.read_csv("C:\\ML\\MLData\\admissions.csv") admissions["actual_label"] = admissions["admit"] admissions = admissions.drop("admit", axis=1) shuffled_index = np.random.permutation(admissions.index) shuffled_admissions = admissions.loc[shuffled_index] admissions = shuffled_admissions.reset_index() admissions.loc[0:128, "fold"] = 1 admissions.loc[129:257, "fold"] = 2 admissions.loc[258:386, "fold"] = 3 admissions.loc[387:514, "fold"] = 4 admissions.loc[515:644, "fold"] = 5 # Ensure the column is set to integer type. admissions["fold"] = admissions["fold"].astype('int') print(admissions.head()) print(admissions.tail()) index gpa gre actual_label fold 0 510 3.177277 594.102992 0 1 1 129 3.412655 631.528607 0 1 2 208 2.728097 553.714399 0 1 3 32 3.093559 551.089985 0 1 4 420 3.141923 537.184894 0 1 index gpa gre actual_label fold 639 306 3.381359 720.718438 1 5 640 376 3.083956 556.918021 1 5 641 486 3.114419 734.297679 1 5 642 296 3.549012 604.697503 1 5 643 449 3.532753 588.986175 1 5 -

交叉案例

from sklearn.linear_model import LogisticRegression # Training model = LogisticRegression() train_iteration_one = admissions[admissions["fold"] != 1] test_iteration_one = admissions[admissions["fold"] == 1] model.fit(train_iteration_one[["gpa"]], train_iteration_one["actual_label"]) # Predicting labels = model.predict(test_iteration_one[["gpa"]]) test_iteration_one["predicted_label"] = labels matches = test_iteration_one["predicted_label"] == test_iteration_one["actual_label"] correct_predictions = test_iteration_one[matches] iteration_one_accuracy = len(correct_predictions) / float(len(test_iteration_one)) print(iteration_one_accuracy) 0.6744186046511628 -

交叉预测

import numpy as np fold_ids = [1,2,3,4,5] def train_and_test(df, folds): fold_accuracies = [] for fold in folds: model = LogisticRegression() train = admissions[admissions["fold"] != fold] test = admissions[admissions["fold"] == fold] model.fit(train[["gpa"]], train["actual_label"]) labels = model.predict(test[["gpa"]]) test["predicted_label"] = labels matches = test["predicted_label"] == test["actual_label"] correct_predictions = test[matches] fold_accuracies.append(len(correct_predictions) / float(len(test))) return(fold_accuracies) accuracies = train_and_test(admissions, fold_ids) print(accuracies) average_accuracy = np.mean(accuracies) print(average_accuracy) [0.6744186046511628, 0.7209302325581395, 0.8372093023255814, 0.1015625, 0.0] 0.46682412790697675 -

roc_auc 交叉验证综合预测结果

from sklearn.model_selection import KFold from sklearn.model_selection import cross_val_score admissions = pd.read_csv("C:\\ML\\MLData\\admissions.csv") admissions["actual_label"] = admissions["admit"] admissions = admissions.drop("admit", axis=1) lr = LogisticRegression() #roc_auc accuracies = cross_val_score(lr, admissions[["gpa"]], admissions["actual_label"], scoring="roc_auc", cv=3) average_accuracy = sum(accuracies) / len(accuracies) print(accuracies) print(average_accuracy)

5 多分类问题- 二分类转换为三分类问题

-

onehot编码(针对气缸cylinders种类)

import pandas as pd import matplotlib.pyplot as plt columns = ["mpg", "cylinders", "displacement", "horsepower", "weight", "acceleration", "year", "origin", "car name"] cars = pd.read_table("C:\\ML\\MLData\\auto-mpg.data", delim_whitespace=True, names=columns) print(cars.head(5)) print(cars.tail(5)) mpg cylinders displacement horsepower weight acceleration year \ 0 18.0 8 307.0 130.0 3504.0 12.0 70 1 15.0 8 350.0 165.0 3693.0 11.5 70 2 18.0 8 318.0 150.0 3436.0 11.0 70 3 16.0 8 304.0 150.0 3433.0 12.0 70 4 17.0 8 302.0 140.0 3449.0 10.5 70 origin car name 0 1 chevrolet chevelle malibu 1 1 buick skylark 320 2 1 plymouth satellite 3 1 amc rebel sst 4 1 ford torino mpg cylinders displacement horsepower weight acceleration year \ 393 27.0 4 140.0 86.00 2790.0 15.6 82 394 44.0 4 97.0 52.00 2130.0 24.6 82 395 32.0 4 135.0 84.00 2295.0 11.6 82 396 28.0 4 120.0 79.00 2625.0 18.6 82 397 31.0 4 119.0 82.00 2720.0 19.4 82 origin car name 393 1 ford mustang gl 394 2 vw pickup 395 1 dodge rampage 396 1 ford ranger 397 1 chevy s-10 -

get_dummies获取所有类别的编码,并废弃原特征

dummy_cylinders = pd.get_dummies(cars["cylinders"], prefix="cyl") #print dummy_cylinders cars = pd.concat([cars, dummy_cylinders], axis=1) print(cars.head()) dummy_years = pd.get_dummies(cars["year"], prefix="year") #print dummy_years cars = pd.concat([cars, dummy_years], axis=1) cars = cars.drop("year", axis=1) cars = cars.drop("cylinders", axis=1) print(cars.head()) mpg cylinders displacement horsepower weight acceleration year \ 0 18.0 8 307.0 130.0 3504.0 12.0 70 1 15.0 8 350.0 165.0 3693.0 11.5 70 2 18.0 8 318.0 150.0 3436.0 11.0 70 3 16.0 8 304.0 150.0 3433.0 12.0 70 4 17.0 8 302.0 140.0 3449.0 10.5 70 origin car name cyl_3 cyl_4 cyl_5 cyl_6 cyl_8 0 1 chevrolet chevelle malibu 0 0 0 0 1 1 1 buick skylark 320 0 0 0 0 1 2 1 plymouth satellite 0 0 0 0 1 3 1 amc rebel sst 0 0 0 0 1 4 1 ford torino 0 0 0 0 1 mpg displacement horsepower weight acceleration origin \ 0 18.0 307.0 130.0 3504.0 12.0 1 1 15.0 350.0 165.0 3693.0 11.5 1 2 18.0 318.0 150.0 3436.0 11.0 1 3 16.0 304.0 150.0 3433.0 12.0 1 4 17.0 302.0 140.0 3449.0 10.5 1 car name cyl_3 cyl_4 cyl_5 ... year_73 year_74 \ 0 chevrolet chevelle malibu 0 0 0 ... 0 0 1 buick skylark 320 0 0 0 ... 0 0 2 plymouth satellite 0 0 0 ... 0 0 3 amc rebel sst 0 0 0 ... 0 0 4 ford torino 0 0 0 ... 0 0 year_75 year_76 year_77 year_78 year_79 year_80 year_81 year_82 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 3 0 0 0 0 0 0 0 0 4 0 0 0 0 0 0 0 0 [5 rows x 25 columns] -

数据混洗

import numpy as np shuffled_rows = np.random.permutation(cars.index) shuffled_cars = cars.iloc[shuffled_rows] highest_train_row = int(cars.shape[0] * .70) train = shuffled_cars.iloc[0:highest_train_row] test = shuffled_cars.iloc[highest_train_row:] from sklearn.linear_model import LogisticRegression unique_origins = cars["origin"].unique() unique_origins.sort() models = {} features = [c for c in train.columns if c.startswith("cyl") or c.startswith("year")] -

unique_origins具有三个类别,得到三个模型

for origin in unique_origins: model = LogisticRegression() X_train = train[features] y_train = train["origin"] == origin model.fit(X_train, y_train) models[origin] = model testing_probs = pd.DataFrame(columns=unique_origins) print (testing_probs) #三分类预测 for origin in unique_origins: # Select testing features. X_test = test[features] # Compute probability of observation being in the origin. testing_probs[origin] = models[origin].predict_proba(X_test)[:,1] print (testing_probs) Empty DataFrame Columns: [1, 2, 3] Index: [] 1 2 3 0 0.562520 0.139051 0.311761 1 0.344396 0.305419 0.340340 2 0.562520 0.139051 0.311761 3 0.845885 0.039920 0.136193 4 0.344396 0.305419 0.340340 5 0.356995 0.251510 0.382683 6 0.962674 0.024810 0.034025 7 0.962987 0.036239 0.022234 8 0.945593 0.035397 0.035385 9 0.871548 0.076721 0.061084 10 0.962229 0.020634 0.039798 11 0.272269 0.326451 0.392319

6 总结

管中窥豹,虽然基础,却道明问题,正是本文的目的。

秦凯新 于深圳