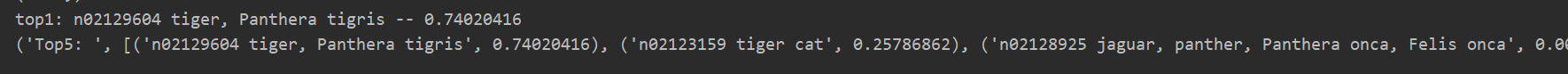

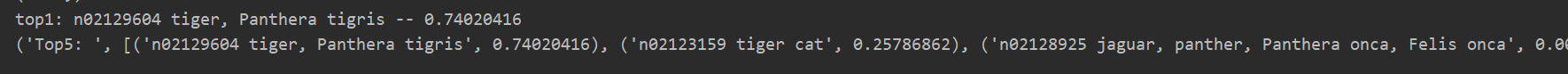

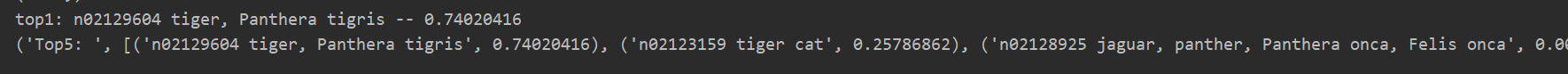

Image used for prediction

I. Implementing VGG16 in PyTorch

import numpy as np
import torch
import torch.nn as nn
import cv2
from torchvision.models import vgg16
from torchvision import transforms
from PIL import Image

# VGG16 layout: an integer is the output channel count of a 3x3 conv, 'M' is a 2x2 max-pool
cfgs = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M']

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

def make_layers():
    layers = []
    in_channel = 3
    for cfg in cfgs:
        if cfg == 'M':
            layers += [nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))]
        else:
            conv2d = nn.Conv2d(in_channels=in_channel, out_channels=cfg, kernel_size=3, padding=1)
            layers += [conv2d, nn.ReLU(inplace=True)]
            in_channel = cfg
    return nn.Sequential(*layers)

class VGGNet(nn.Module):
    def __init__(self):
        super(VGGNet, self).__init__()
        self.features = make_layers()  # build the convolutional layers
        self.classifier = nn.Sequential(  # build the fully connected layers
            nn.Linear(in_features=512 * 7 * 7, out_features=4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 1000)
        )

    def forward(self, input):  # forward pass
        feature = self.features(input)
        linear_input = torch.flatten(feature, 1)
        out_put = self.classifier(linear_input)
        return out_put

# Image preprocessing: resize to 224x224, convert to a torch tensor, normalize
tran = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])

if __name__ == '__main__':
    # Alternative loading path with OpenCV, kept for reference:
    # image = cv2.imread("car.jpg")
    # image = cv2.resize(src=image, dsize=(224, 224))
    # image = image.reshape(1, 224, 224, 3)
    # image = torch.from_numpy(image)
    # image = image.type(torch.FloatTensor)
    # image = image.permute(0,3,1,2)

    image = Image.open("tiger.jpeg").convert("RGB")  # force 3 channels
    image = tran(image)
    image = torch.unsqueeze(image, dim=0)  # add the batch dimension

    net = VGGNet()
    net = net.to(device)
    image = image.to(device)
    # Load the parameters of the model pretrained in PyTorch
    net.load_state_dict(torch.load("vgg16-397923af.pth", map_location=device))
    net.eval()

    with torch.no_grad():  # inference only, no gradients needed
        output = net(image)  # raw class scores (logits), not probabilities

    test, prop = torch.max(output, 1)  # test: best score, prop: its class index
    synset = [l.strip() for l in open("synset.txt").readlines()]
    print("top1:", synset[prop.item()])

    preb_index = torch.argsort(output, dim=1, descending=True)[0]
    top5 = [(synset[preb_index[i]], output[0][preb_index[i]].item()) for i in range(5)]
    print("Top5:", top5)
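The PyTorch network above ends in a plain `nn.Linear`, so the top-5 values it prints are raw scores (logits), whereas the Keras and TensorFlow versions end in a softmax and print probabilities. The following is a minimal, standard-library-only sketch of how such scores could be turned into probabilities and ranked. The helper names `softmax` and `top_k` and the toy scores and labels are assumptions made for illustration; they are not part of the original code.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, labels, k=5):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(logits)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(labels[i], probs[i]) for i in order[:k]]


if __name__ == "__main__":
    # Hypothetical scores for a toy 4-class problem
    demo_logits = [2.0, 0.5, 4.0, -1.0]
    demo_labels = ["cat", "dog", "tiger", "car"]
    for label, p in top_k(demo_logits, demo_labels, k=3):
        print(f"{label}: {p:.4f}")
```

In the PyTorch script itself, `torch.softmax(output, dim=1)` should give the same probabilities directly on the output tensor.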

II. Implementing VGG16 in Keras

from keras.layers import Input, Conv2D, MaxPool2D, Flatten, Dense
from keras.models import Model
import cv2
import numpy as np

def VGG16(input):
    # Block 1
    X = Conv2D(filters=64, kernel_size=(3, 3), activation="relu",
               padding="same", name="block1_conv1")(input)
    X = Conv2D(filters=64, kernel_size=(3, 3), activation="relu",
               padding="same", name="block1_conv2")(X)
    X = MaxPool2D(pool_size=(2, 2), strides=(2, 2), name="block1_pool")(X)

    # Block 2
    X = Conv2D(filters=128, kernel_size=(3, 3), activation="relu",
               padding="same", name="block2_conv1")(X)
    X = Conv2D(filters=128, kernel_size=(3, 3), activation="relu",
               padding="same", name="block2_conv2")(X)
    X = MaxPool2D(pool_size=(2, 2), strides=(2, 2), name="block2_pool")(X)

    # Block 3
    X = Conv2D(filters=256, kernel_size=(3, 3), activation="relu",
               padding="same", name="block3_conv1")(X)
    X = Conv2D(filters=256, kernel_size=(3, 3), activation="relu",
               padding="same", name="block3_conv2")(X)
    X = Conv2D(filters=256, kernel_size=(3, 3), activation="relu",
               padding="same", name="block3_conv3")(X)
    X = MaxPool2D(pool_size=(2, 2), strides=(2, 2), name="block3_pool")(X)

    # Block 4
    X = Conv2D(filters=512, kernel_size=(3, 3), activation="relu",
               padding="same", name="block4_conv1")(X)
    X = Conv2D(filters=512, kernel_size=(3, 3), activation="relu",
               padding="same", name="block4_conv2")(X)
    X = Conv2D(filters=512, kernel_size=(3, 3), activation="relu",
               padding="same", name="block4_conv3")(X)
    X = MaxPool2D(pool_size=(2, 2), strides=(2, 2), name="block4_pool")(X)

    # Block 5
    X = Conv2D(filters=512, kernel_size=(3, 3), activation="relu",
               padding="same", name="block5_conv1")(X)
    X = Conv2D(filters=512, kernel_size=(3, 3), activation="relu",
               padding="same", name="block5_conv2")(X)
    X = Conv2D(filters=512, kernel_size=(3, 3), activation="relu",
               padding="same", name="block5_conv3")(X)
    X = MaxPool2D(pool_size=(2, 2), strides=(2, 2), name="block5_pool")(X)
    print(X.shape)

    X = Flatten(name='flatten')(X)
    X = Dense(4096, activation='relu', name='fc1')(X)
    X = Dense(4096, activation='relu', name='fc2')(X)
    X = Dense(1000, activation='softmax', name='predictions')(X)
    return X

def predict(prob, file_path):
    # One class label per line, in the same order as the network outputs
    synset = [l.strip() for l in open(file_path).readlines()]
    print(len(synset))
    preb_index = np.argsort(prob)[::-1]  # class indices, most likely first
    top1 = synset[preb_index[0]]
    print("top1:", top1, "--", prob[preb_index[0]])
    top5 = [(synset[preb_index[i]], prob[preb_index[i]]) for i in range(5)]
    print("Top5:", top5)
    return top1

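if __name__ == '__main__':
    image = cv2.imread("car.jpg")  # OpenCV loads images in BGR channel order
    image = cv2.resize(src=image, dsize=(224, 224))
    # These pretrained weights expect BGR input with the ImageNet channel means subtracted
    image = image.astype(np.float32) - np.array([103.939, 116.779, 123.68], dtype=np.float32)
    image = image.reshape(1, 224, 224, 3)

    input = Input(shape=(224, 224, 3))
    vgg_output = VGG16(input)
    model = Model(input, vgg_output)
    model.load_weights("vgg16_weights_tf_dim_ordering_tf_kernels.h5", by_name=True)

    prob = model.predict(image)
    print(prob, prob.shape)
    predict(prob[0], "synset.txt")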
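The `print(X.shape)` call in the Keras model shows the feature map just before `Flatten`, which for a 224x224 input should be `(None, 7, 7, 512)`. The sketch below traces that shape by hand to show where the `512 * 7 * 7` input size of the first fully connected layer comes from. It uses only the standard library; `CFG` and `trace_shape` are illustrative names, not part of the original code.

```python
# Same layout as the five blocks above: ints are conv filters, 'M' is a max-pool
CFG = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
       512, 512, 512, 'M', 512, 512, 512, 'M']


def trace_shape(size=224, channels=3, cfg=CFG):
    """Return (side length, channels) after the convolutional stack."""
    for item in cfg:
        if item == 'M':
            size //= 2        # 2x2 pooling with stride 2 halves each side
        else:
            channels = item   # 3x3 conv with "same" padding keeps the side length
    return size, channels


if __name__ == "__main__":
    side, depth = trace_shape()
    print(side, depth, side * side * depth)  # 7 512 25088
```

Five poolings take the side length through 224, 112, 56, 28, 14 and 7, so the flattened vector has 7 * 7 * 512 = 25088 entries.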

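III. Implementing VGG16 in TensorFlow

# Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session)
import tensorflow as tf
import numpy as np
import time
import cv2

# Per-channel ImageNet means in BGR order
VGG_MEAN = [103.939, 116.779, 123.68]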

class vgg16:
    def __init__(self):
        # vgg16.npy stores a pickled dict {layer name: [weights, bias]};
        # recent NumPy versions require allow_pickle=True to load it
        self.parameters = np.load("vgg16.npy", encoding='latin1', allow_pickle=True).item()

    def vgg_buid(self, img):
        start_time = time.time()
        with tf.variable_scope("preprocess") as scop:
            mean = tf.constant(VGG_MEAN, dtype=tf.float32, shape=(1, 1, 1, 3), name="imag_mean")
            image = img - mean

        self.conv1_1 = self.conv_layer(image, "conv1_1")
        self.conv1_2 = self.conv_layer(self.conv1_1, "conv1_2")
        self.pool1 = self.pool_layer(self.conv1_2, name="pool1")

        self.conv2_1 = self.conv_layer(self.pool1, "conv2_1")
        self.conv2_2 = self.conv_layer(self.conv2_1, "conv2_2")
        self.pool2 = self.pool_layer(self.conv2_2, name="pool2")

        self.conv3_1 = self.conv_layer(self.pool2, "conv3_1")
        self.conv3_2 = self.conv_layer(self.conv3_1, "conv3_2")
        self.conv3_3 = self.conv_layer(self.conv3_2, "conv3_3")
        self.pool3 = self.pool_layer(self.conv3_3, name="pool3")

        self.conv4_1 = self.conv_layer(self.pool3, "conv4_1")
        self.conv4_2 = self.conv_layer(self.conv4_1, "conv4_2")
        self.conv4_3 = self.conv_layer(self.conv4_2, "conv4_3")
        self.pool4 = self.pool_layer(self.conv4_3, name="pool4")

        self.conv5_1 = self.conv_layer(self.pool4, "conv5_1")
        self.conv5_2 = self.conv_layer(self.conv5_1, "conv5_2")
        self.conv5_3 = self.conv_layer(self.conv5_2, "conv5_3")
        self.pool5 = self.pool_layer(self.conv5_3, name="pool5")

        self.fc6 = self.fc_layer(self.pool5, name="fc6")
        self.fc6_relu = tf.nn.relu(self.fc6)
        self.fc7 = self.fc_layer(self.fc6_relu, name="fc7")
        self.fc7_relu = tf.nn.relu(self.fc7)
        self.fc8 = self.fc_layer(self.fc7_relu, name="fc8")
        self.prob = tf.nn.softmax(self.fc8, name="prob")
        print("build model finished: %ds" % (time.time() - start_time))

    def conv_layer(self, input, name):
        with tf.variable_scope(name) as scop:
            filter = self.get_conv_filter(name)
            conv = tf.nn.conv2d(input, filter, strides=[1, 1, 1, 1], padding="SAME")
            bias = self.get_bias(name)
            conv = tf.nn.relu(tf.nn.bias_add(conv, bias))
            return conv

    def pool_layer(self, input, name):
        return tf.nn.max_pool(input, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding="SAME", name=name)

    def fc_layer(self, input, name):
        # shape = tf.shape(input).aslist()
        shape = input.get_shape().as_list()
        print(shape)
        # Flatten everything except the batch dimension
        dims = 1
        for i in shape[1:]:
            dims = dims * i
        fc_input = tf.reshape(input, shape=(-1, dims))
        filter = self.get_conv_filter(name)  # entry [0] holds the weight matrix for fc layers too
        bias = self.get_bias(name)
        fc = tf.nn.bias_add(tf.matmul(fc_input, filter), bias)
        return fc

    def get_conv_filter(self, name):
        return tf.constant(self.parameters[name][0], name="filter")

    def get_bias(self, name):
        return tf.constant(self.parameters[name][1], name="bias")

def predict(prob, file_path):
    # One class label per line, in the same order as the network outputs
    synset = [l.strip() for l in open(file_path).readlines()]
    print(len(synset))
    prob = prob.reshape(-1)
    preb_index = np.argsort(prob)[::-1]  # class indices, most likely first
    print(preb_index.shape)
    top1 = synset[preb_index[0]]
    print("top1:", top1, "--", prob[preb_index[0]])
    top5 = [(synset[preb_index[i]], prob[preb_index[i]]) for i in range(5)]
    print("Top5:", top5)
    return top1

if __name__ == '__main__':
    image = cv2.imread("tiger.jpeg")
    image = cv2.resize(src=image, dsize=(224, 224))
    image = image.reshape(1, 224, 224, 3)

    input = tf.placeholder(dtype=tf.float32, shape=(None, 224, 224, 3), name="input")
    vgg = vgg16()
    vgg.vgg_buid(input)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        prob_result = sess.run(vgg.prob, feed_dict={input: image})
        print("prob_result.shape:", prob_result.shape)
        predict(prob_result, "synset.txt")
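The TensorFlow version reads a weight tensor and a bias vector for every layer out of `vgg16.npy` (entries `[0]` and `[1]` in `get_conv_filter` and `get_bias`). As a rough sanity check on what such a file must contain, the sketch below counts the parameters implied by the architecture used in all three implementations. It is standard-library-only; `CFG` and `count_parameters` are illustrative names rather than part of the original code.

```python
# Ints are the output channels of a 3x3 conv, 'M' is a 2x2 max-pool
CFG = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
       512, 512, 512, 'M', 512, 512, 512, 'M']


def count_parameters(cfg=CFG, in_channels=3, input_size=224,
                     fc_sizes=(4096, 4096, 1000)):
    """Count weights and biases in the conv stack and the dense layers."""
    total = 0
    size = input_size
    for item in cfg:
        if item == 'M':
            size //= 2  # pooling has no parameters, it only shrinks the map
        else:
            total += 3 * 3 * in_channels * item + item  # kernels + biases
            in_channels = item
    features = size * size * in_channels  # flattened input to the first dense layer
    for out_features in fc_sizes:
        total += features * out_features + out_features  # weights + biases
        features = out_features
    return total


if __name__ == "__main__":
    print(count_parameters())  # 138357544
```

About 138 million parameters at 4 bytes each comes to roughly 550 MB, and most of that sits in the first fully connected layer (25088 x 4096 weights).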
