英文原文地址Memory optimization for feeds on Android

读后感

在Java中HashSet只能存放继承自Objcet的对象,这中情况下“基本数据类型”转化为继承自Object的(Integer、Long等)会产生很多中间Object对象,占用过多的内存,从而引发垃圾回收,造成系统卡顿。

下边是原文及翻译

Millions of people use Facebook on Android devices every day, scrolling through News Feed, Profile, Events, Pages, and Groups to interact with the people and information they care about. All of these different feed types are powered by a platform created by the Android Feed Platform team, so any optimization we make to the Feed platform could potentially improve performance across our app. We focus on scroll performance, as we want people to have a smooth experience when scrolling through their feeds.

每天有数百万的用户,在Android平台上使用Facebook,通过滑动 "新闻动态"、"用户资料"、"Events"、"Pages"和"群组"动态列表 来与他们关心的人和信息互动。所有这些不同的动态类型是由Android动态平台组提供的,因此我们对动态列表的任何优化,都有可能提高Fackbook这个app的性能。我们专注于动态列表滑动时的性能,当用户滑动动态列表时,我们希望可以为用户提供一种流畅的用户体验。

To help us achieve that, we have several automatic tools that run performance tests on the Feed platform, across different scenarios and on different devices, measuring how our code performs in runtime, memory use, frame rate, and more. One of those tools, Traceview, showed a relatively high number of calls to the Long.valueOf() function, which led to objects accumulating in memory and causing the app to stall. This post describes the problem, the potential solutions we weighed, and the set of optimizations we made to improve the Feed platform.

为了帮助我们实现这一点,我们使用了几个自动工具,在不同的设备和不同的情景下,测试动态列表滑动时候的内存使用情况、帧率等。这些测试工具中Traceview显示Long.valueof()方法的调用次数较多,使内存空闲对象迅速增加,从而影响App的运行速度。在这篇文章中,我们介绍了问题、潜在解决方案、以及在动态列表上我们做的优化。

The downside of convenience (便利化的缺陷)

After noticing the high number of calls to the Long.valueOf() function in one of our method profiling reports from Traceview, we ran further tests that confirmed that as we scrolled through News Feed, there were an unexpectedly high number of invocations for this method.

通过分析Traceview的运行报告,在注意到Long.valueof()方法被多次调用后,我们进行了进一步的测试,确认当我们滑动新闻动态时,这个方法的调用数量出乎意料地高。

When we looked at the stacktrace, we found that the function was not being called explicitly from Facebook's code but implicitly by code inserted by the compiler. This function was called when assigning(分派、设定) a primitive long value where a Long object is expected. Java supports both Object and primitive representation of the simple types (e.g., integer, long character) and provides a way to seamlessly convert between them. This feature is called autoboxing, because it “boxes” a primitive type into a corresponding Object type. While that's a convenient development feature, it creates new objects unbeknownst to the developer.

当我们查看stacktrace时,我们发现在Facebook的代码并没有直接调用该方法,而是由编译器插入的代码隐式调用的。这个函数在long转化为Long时被调用。Java提供了“基本数据类型”(例如: int、long)与继承自Object数据类型(例如: Integer、Long)转化的支持。这个特性被称为 autoboxing,它将“基本数据类型”转化为相应的对象类型。这是一个方便的开发特性,在开发者无感知的情况下,为开发者创建了一个新的对象。

And these objects add up.

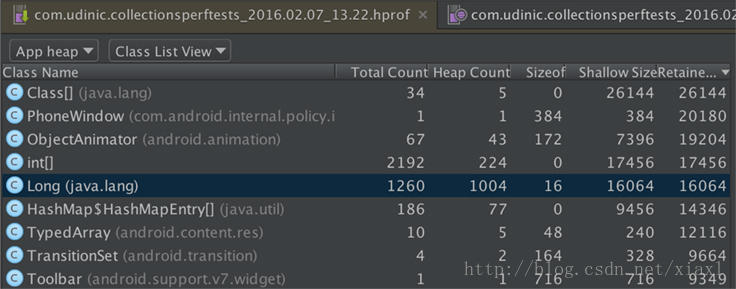

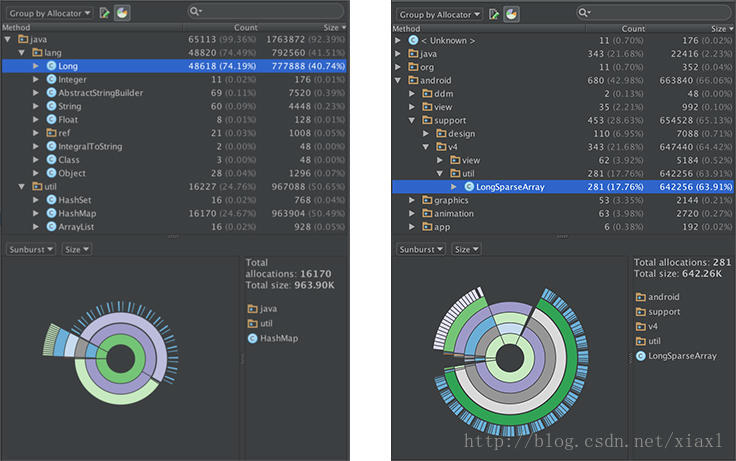

In this heap dump taken from a sample app, Long objects have a noticeable presence; while each object is not big by itself, there are so many that they occupy(占据) a large portion( 部分) of the app's memory in the heap. This is especially problematic(问题的) for devices running the Dalvik runtime. Unlike ART, which is the newer Android runtime environment, Dalvik doesn't have a generational garbage collection, known to be more optimal for handling many small objects. As we scroll through News Feed and the number of objects grows, the garbage collection will cause the app to pause and sweep(打扫) unused objects from the memory. The more objects that accumulate(积攒), the more frequently( 频繁地) the garbage collector will have to pause the app, causing it to stutter(结巴) and stall and making for a poor user experience.

截图取自一个示例App,其中Long有一个明显的调用,从图中可以看出,每个对象本身并不大,却占用了App堆内存的很大一部分空间。这肯定是有问题的。不像Android runtime(ART虚拟机)环境,Dalvik虚拟机在处理许多小对象时,并没有分代垃圾收集机制。当我们滚动 “新闻动态” 时,对象的数量迅速增长,垃圾回收机制会导致应用程序暂停并清理没有引用的对象的内存。对象积累越多,垃圾回收的次数就越频繁。进行垃圾回收时,Dalvik将暂停应用程序,导致App卡顿,造成一个很差的用户体验。

Luckily, tools such as Traceview and Allocation Tracker can help find where these calls are made. After reviewing the origins of these autoboxing occurrences, we found that the majority of them were done while inserting long values into a HashSet data structure. (We use this data structure to store hash values of News Feed stories, and later to check if a certain story's hash is already in the Set.) HashSet provides quick access to its items, an important feature allowing interaction with it as the user is scrolling through News Feed. Since the hash is calculated and stored into a primitive long variable, and our HashSet works only with objects, we get the inevitable(不可避免的) autoboxing when calling setStories.put(lStoryHash).

幸运的是,Traceview和Allocation Tracker这些工具可以帮助我们查找这些调用。回顾这些autoboxing转化,我们发现大部分的autoboxing转化发生在long插入到HashSet<Long>时。(我们用HashSet<Long>这个数据结构存储“新闻动态”数据,并通过hash查找某一个“新闻动态”数据是否在此数据结构中)。当用户滑动“新闻动态”列表时,HashSet提供快速快速的数据查找能力。HashSet只能存储继承自Object的对象,并不能存储“基本数据类型”,而我们用于计算和存储的对象是一个long型的变量,当进行setStories.put(lStoryHash)操作时,不可避免的发生long到Long的转化。

As a solution, a Set implementation( 实现) for primitive types can be used, but that turned out not to be as straightforward(简单的) as we expected.

其中一个解决方案,可以实现一种支持"“基本数据类型”数据类型"的集合,但这种解决方案并不像我们预期的那么简单。

Existing solutions (解决方案)

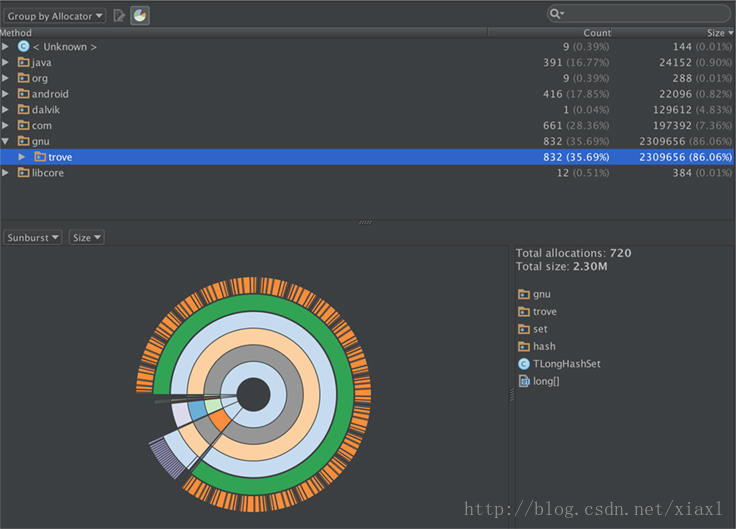

There are a few existing Java libraries that provide a Set implementation for primitive types. Almost all of these libraries were created more than 10 years ago, when the only Java running on mobile devices was J2ME. So, to determine viability, we needed to test them under Dalvik/ART and ensure they could perform well on more constrained mobile devices. We created a small testing framework to help compare these libraries with the existing HashSet and with one another. The results showed that a few of these libraries had a faster runtime than HashSet, and with fewer Long objects, but they still internally allocated a lot of objects. As an example, TLongHashSet, part of the Trove library, allocated about 2 MB worth of objects when tested with 1,000 items:

有一些现有的Java库,为"“基本数据类型”提供Set实现。几乎所有这些库创建十多年前,当时在移动设备上使用Java的设备只有J2ME。因此,为了确定是否可行,我们需要在Dalvik/ART上进行测试,确保他们可以运行在更多的移动设备上。我们构建了一个小测试框架来,来实现这些现有库与HashSet进行比较。结果表明,其中一些库相比于HashSet运行速度更快,创建的Long对象更少,但他们仍然在内存中分配了大量的对象。例如,Trove库中的TLongHashSet,1000项Items分配了约2 MB内存控件的Object对象。

Testing other libraries, such as PCJ and Colt, showed similar results.

It was possible that the existing solutions didn't fit our needs. We considered whether we could instead create a new Set implementation and optimize it for Android. Looking inside Java's HashSet, there's a relatively simple implementation using a single HashMap to do the heavy lifting.

现有的解决方案不符合我们的需要。我们考虑是否可以创建一个对Android优化的Set实现。查看Java HashSet源码实现,发现其实现相对简单,内部采用HashMap来实现。

public class HashSet<E> extends AbstractSet<E> implements Set<E>, ... {

transient HashMap<E, HashSet<E>> backingMap;

...

@Override public boolean add(E object) {

return backingMap.put(object, this) == null;

}

@Override public boolean contains(Object object) {

return backingMap.containsKey(object);

}

...

}

Adding a new item to the HashSet means adding it to the internal HashMap where the object is the key and the HashSet's instance is the value. To check object membership, HashSet checks whether its internal HashMap contains the object as a key. An alternative to HashSet could be implemented using an Android-optimized map and the same principles.

添加一个Item到HashSet意味着将此Item添加到HashMap中,在HashMap中,该Item对象做为Key存在;在HashSet中查找该Item对象时,实际是在对比HashMap 中的Key中查找是否存在该Item对象。在Android平台上的数据交换同样使用的这套准则。

Introducing(介绍) `LongArraySet`

You may already be familiar with LongSparseArray, a class in the Android Support Library that acts as a map using a primitive long as a key. Example usage:

您可能已经熟悉LongSparseArray,其存在于Android的Support Library库中,做为一个Map实现,其key为long型数据变量。使用示例:

LongSparseArray<String> longSparseArray = new LongSparseArray<>();

longSparseArray.put(3L, "Data");

String data = longSparseArray.get(3L); // the value of data is "Data"

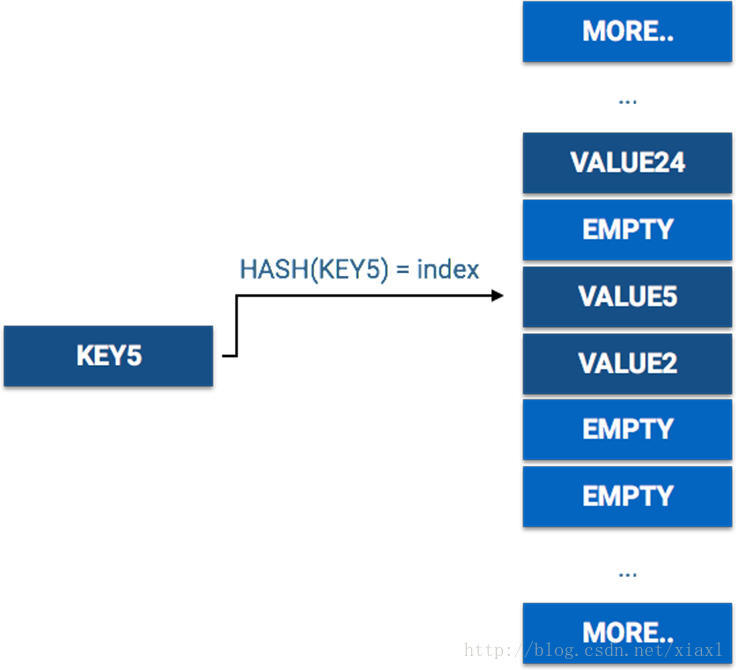

LongSparseArray works differently than HashMap, though. When calling mapHashmap.get(KEY5), this is how the value is found in the HashMap:

LongSparseArray与HashMap工作原理不同。当调用mapHashmap.get(KEY5)时,以下为HashMap查找原理:

When retrieving a value using a key on a HashMap, it's accessing the value in the array using a hash of the key as the index, a direct access in O(1). Making the same call on a LongSparseArray looks like this:

在HashMap中检索一个值时,通过其对应key的hash值进行比较,直接检索时时间复杂度为O(1)。LongSparseArray 做同样操作时:

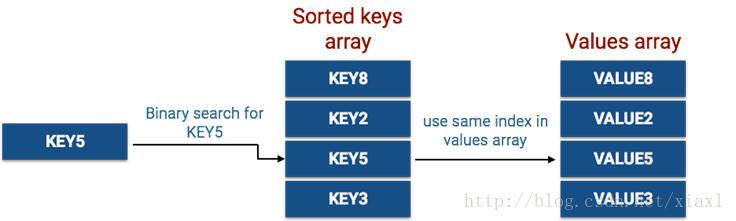

LongSparseArray searches a sorted keys array for the key's value using a binary search, an operation with runtime of O(log N). The index of the key in the array is later used to find the value in the values array.

LongSparseArray搜索某一个key时,采用二分查找算法查找存放key的数组,时间复杂度O(log N)。查找到的key的index用于在Value数组中找到对应的value。

HashMap allocates one big array in an effort to avoid collisions, which are causing the search to be slower. LongSparseArray allocates two small arrays, making its memory footprint smaller. But to support its search algorithm, LongSparseArray needs to allocate its internal arrays in consecutive memory blocks. Adding more items will require allocating new arrays when there's no more space in the current ones. The way LongSparseArray works makes it less ideal when holding more than 1,000 items, where these differences have a more significant impact on performance. (You can learn more about LongSparseArray in the official documentation and by watching this short video by Google.)

为了避免数据冲突HashMap创建了一个很大的数组,从而导致搜索速度较慢。LongSparseArray分配两个较小的数组,使其内存占用较小。但是为了支持其搜索算法,LongSparseArray需要分配连续的内存块。添加更多的Item时,当数组空间不足时,需要分配新的数组空间。LongSparseArray的工作方式,使得Item数量超过1000项时,其表现不是很理想,这些差异对性能有更重大的影响。(想了解更多关于LongSparseArray的内容,可以阅读official documentation和谷歌提供的short video ) 。

Since the LongSparseArray's keys are of a primitive long type, we can create a data structure with the same approach as HashSet but with a LongSparseArray as the internal map instead of HashMap.

由于LongSparseArray使用“基本数据类型”long做为key,我们可以用LongSparseArray替换HashSet中的HashMap,从而创建一个与HashSet相似的数据结构。

And so LongArraySet was created.

因此LongArraySet被创建出来了。

The new data structure looked promising, but the first rule of optimization is “always measure.” By using the same testing framework from earlier, we compared the new data structure with HashSet. Each data structure was tested by adding X number of items, checking the existence of each item, and later removing all of them. We ran tests using different numbers of items (X=10, X=100, X=1,000 …) and averaged the time it took to complete each operation per item.

新的数据结构看起来是很有前景的,但是最优的解决方案总是在不断的权衡的。通过之间介绍的小测试程序,我们把新的数据结构与HashSet进行了对比,对每一种数据结构进行添加X条数据、检测Item数据存在情况和移除数据测试。我们测试使用不同数量的Item(X = 10、X = 100、X = 1000…),并计算对应操作的平均时间。

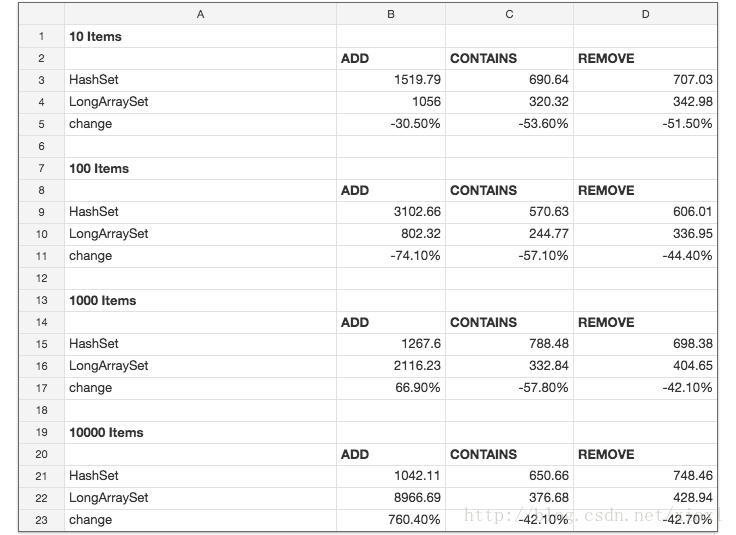

The runtime results (time shown is in nanoseconds):

测试结果(单位:纳秒)

We saw a runtime improvement for the contains and remove methods using the new data structure. Also, as the number of items increased in the array set, it took more time to add new items. That's consistent with what we already knew about LongSparseArray — it doesn't perform as well as HashMap when the number of items is more than 1,000. In our use cases, we're dealing with only hundreds of items, so this is a trade-off we're willing to make.

我们看到使用新的数据结构后,contains与remove操作性能的显著提升。同时,随着item数量的增加,需要花更多的时间去添加一个新的item。这符合我们对于 LongSparseArray中item超过1000后的操作表现预期。在我们的案例中,我们仅仅要处理数百条的数据,因此经过权衡,我们将采用这种方案。

We also saw a big improvement related to memory. In reviewing the heap dumps and Allocation Tracker reports, we noticed a decrease in object allocations. Here's a side-by-side allocation report for the HashSet and LongArraySet implementations, when adding 1,000 items for 20 iterations:

我们还看到一个内存的重大提升。回顾heap dumps和Allocation Tracker报告,我们注意到对象分配的减少。以下添加1000条数据时,HashSet和LongArraySet的内存分配报告。

In addition to avoiding all the Long object allocations, LongSparseArray was more memory-efficient internally, with about 30 percent fewer allocations in this scenario.

避免了Long对象占用内存,在这种情景下,LongSparseArray减少了30%的内存占用。

Conclusion 结论

By understanding how other data structures work, we were able to create a more optimized data structure for our needs. The less the garbage collector has to work, the lower the likelihood of dropped frames. Using the new LongArraySet class, and a similar IntArraySet for the primitive int data type, we were able to cut down a significant number of allocations in our entire app.

通过了解数据结构的工作原理,我们可以创建一个满足我们要求的更优的数据结构。垃圾回收的次数越少,对帧率的影响就越小。使用LongArraySet和IntArraySet ,我们可以减少整个应用程序中,对象的内存分配。

This case study demonstrates the importance of measuring our options to ensure that we were introducing an improvement. We also accepted that while this solution is not perfect for all use cases, since this implementation is slower for very large data sets, it was an acceptable trade-off for us to optimize our code.

这个学习案例展示了,为了确保我们引入改进的可行,不断权衡的重要性。对于这个方案并不适用于所有的情况,这点我们是可以接收的,虽然当数据量很大时,这个方案的效率是很差的,但是这是一个我们可以接受的折衷优化方案。

You can find the source code for the two data structures here. We are excited to continue working on challenges and optimizing our Feed platform and to share our solutions with the community.

从这里可以下载LongArraySet 与IntArraySet的代码。

如果打不开,可以从以下连接获取源码:

http://blog.csdn.net/xiaxl/article/details/72730959

本人英语水平较烂,如有翻译错误之处,请指出,我会积极修正,谢谢大家