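Live streaming is familiar to everyone by now, and the technology behind it keeps maturing. One feature seen today: when a danmaku (bullet comment) drifts over the streamer's face it disappears automatically, and it reappears once it leaves the face area. The principle is simple: run face recognition and hide every danmaku inside the detected face region. It is easier said than done, though. This article explains how to run face recognition on an RTMP video stream, covering the following points.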

- Choosing an approach

- Integrating ffmpeg

- Parsing the stream data with ffmpeg

- Drawing the data with OpenGL

- Face tracking and drawing the face box
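The danmaku-hiding idea from the introduction comes down to a rectangle overlap test between each comment and each detected face. Below is a minimal sketch of that test, assuming both are available as axis-aligned pixel rectangles. `DanmakuMask` and `Box` are hypothetical names made up for illustration; they are not part of any danmaku library or face recognition SDK.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper types for illustration only.
final class DanmakuMask {

    /** Axis-aligned rectangle in screen pixels: [left, right) x [top, bottom). */
    static final class Box {
        final int left, top, right, bottom;

        Box(int left, int top, int right, int bottom) {
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }

        /** True when the two rectangles share at least one pixel. */
        boolean intersects(Box other) {
            return left < other.right && other.left < right
                    && top < other.bottom && other.top < bottom;
        }
    }

    /** Returns the comments that should stay visible: those touching no face box. */
    static List<Box> visibleComments(List<Box> comments, List<Box> faces) {
        List<Box> visible = new ArrayList<>();
        for (Box comment : comments) {
            boolean coversFace = false;
            for (Box face : faces) {
                if (comment.intersects(face)) {
                    coversFace = true;
                    break;
                }
            }
            if (!coversFace) {
                visible.add(comment);
            }
        }
        return visible;
    }

    public static void main(String[] args) {
        List<Box> faces = new ArrayList<>();
        faces.add(new Box(100, 100, 200, 200));
        List<Box> comments = new ArrayList<>();
        comments.add(new Box(0, 120, 150, 150));   // overlaps the face, hidden
        comments.add(new Box(300, 120, 450, 150)); // clear of the face, shown
        System.out.println(visibleComments(comments, faces).size());
    }
}
```

A real player would rerun this check every frame, since both the comments and the face box move.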

I. Choosing an approach

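At first I wanted to use a ready-made player that plays a stream once you give it a URL. After integrating one, I found that it was indeed easy to integrate and use, and it displayed the video, but it had one fatal problem: it offered no interface for getting the raw frame data, so face recognition was impossible. I therefore switched to ffmpeg. If all you need is to play an RTMP stream on a device, bilibili's ijkplayer framework is perfectly adequate and is easy to integrate and use.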

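With the stream-parsing approach settled, the remaining pieces are rendering and face recognition. I render with OpenGL because I had previously written a custom SurfaceView that I could reuse directly. For face recognition I chose ArcSoft's (虹软) engine for two reasons. First, it is fairly simple to use, and ArcSoft's demo is well written, so a lot can be borrowed from it directly. Second, it is free. The other vendors' engines I have tried all come with a trial period, which I dislike. ArcSoft's engine also performs well, so it was my first choice.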

II. Integrating ffmpeg

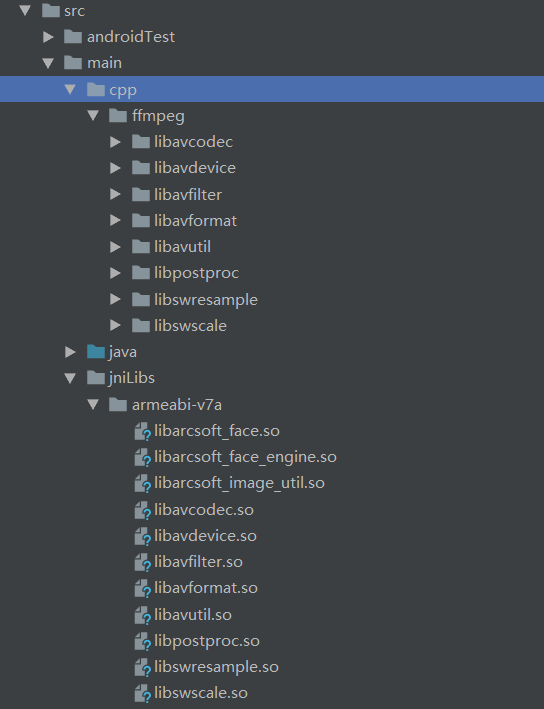

1. Directory structure

Create the cpp and jniLibs directories under src/main and put the ffmpeg libraries in them, as shown in the figure below.

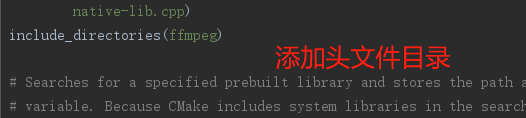

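2. CMakeLists

First, create two files under src/main/cpp: CMakeLists.txt and rtmpplayer-lib.cpp. CMakeLists.txt tells CMake how to build and link the libraries; rtmpplayer-lib.cpp holds the JNI code that parses the data stream.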

CMakeLists.txt

# For more information about using CMake with Android Studio, read the

# documentation: https://d.android.com/studio/projects/add-native-code.html

# Sets the minimum version of CMake required to build the native library.

cmake_minimum_required(VERSION 3.4.1)

# Creates and names a library, sets it as either STATIC

# or SHARED, and provides the relative paths to its source code.

# You can define multiple libraries, and CMake builds them for you.

# Gradle automatically packages shared libraries with your APK.

add_library( # Sets the name of the library.

rtmpplayer-lib

# Sets the library as a shared library.

SHARED

# Provides a relative path to your source file(s).

rtmpplayer-lib.cpp)

include_directories(ffmpeg)

# Searches for a specified prebuilt library and stores the path as a

# variable. Because CMake includes system libraries in the search path by

# default, you only need to specify the name of the public NDK library

# you want to add. CMake verifies that the library exists before

# completing its build.

find_library( # Sets the name of the path variable.

log-lib

# Specifies the name of the NDK library that

# you want CMake to locate.

log)

# Specifies libraries CMake should link to your target library. You

# can link multiple libraries, such as libraries you define in this

# build script, prebuilt third-party libraries, or system libraries.

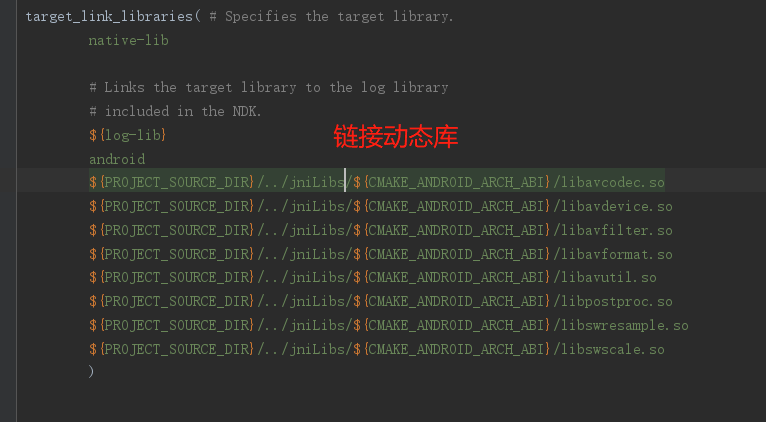

target_link_libraries( # Specifies the target library.

rtmpplayer-lib

# Links the target library to the log library

# included in the NDK.

${log-lib}

android

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libavcodec.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libavdevice.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libavfilter.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libavformat.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libavutil.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libpostproc.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libswresample.so

${PROJECT_SOURCE_DIR}/../jniLibs/${CMAKE_ANDROID_ARCH_ABI}/libswscale.so

)

3. build.gradle

Here we need to specify the location of the CMake file above and the ABI to build for.

android{

defaultConfig {

...

...

externalNativeBuild {

cmake {

abiFilters "armeabi-v7a"

}

}

ndk {

abiFilters 'armeabi-v7a' // only build the armeabi-v7a .so

}

}

externalNativeBuild {

cmake {

// path is the location of the CMakeLists.txt above

path "src/main/cpp/CMakeLists.txt"

version "3.10.2"

}

}

}

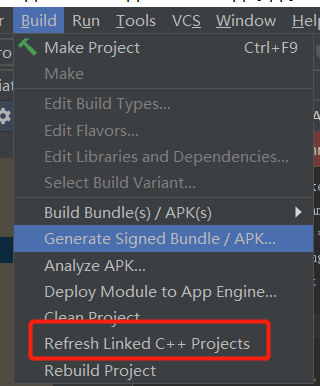

4. Building

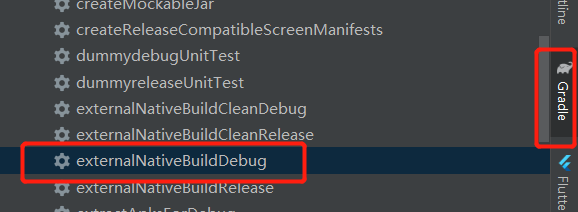

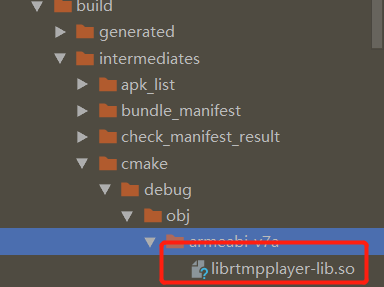

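Once the steps above are done, we can build. Click Build > Refresh Linked C++ Projects, then run Gradle/other/externalNativeBuildDebug in the Gradle panel on the right. When the build finishes, the resulting .so can be found under build/intermediates/cmake. If you can see librtmpplayer-lib.so there, congratulations: the ffmpeg integration is complete.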

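III. Parsing the stream data with ffmpeg

1. Parsing the stream in JNI

The rtmpplayer-lib.cpp file mentioned above is where we write the JNI code that parses the RTMP stream.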

#include <jni.h>

#include <string>

#include <android/log.h>

#include <fstream>

#define LOGE(FORMAT, ...) __android_log_print(ANDROID_LOG_ERROR, "player", FORMAT, ##__VA_ARGS__);

#define LOGI(FORMAT, ...) __android_log_print(ANDROID_LOG_INFO, "player", FORMAT, ##__VA_ARGS__);

extern "C" {

#include "libavformat/avformat.h"

#include "libavcodec/avcodec.h"

#include "libswscale/swscale.h"

#include "libavutil/imgutils.h"

#include "libavdevice/avdevice.h"

}

static AVPacket *pPacket;

static AVFrame *pAvFrame, *pFrameNv21;

static AVCodecContext *pCodecCtx;

struct SwsContext *pImgConvertCtx;

static AVFormatContext *pFormatCtx;

uint8_t *v_out_buffer;

jobject frameCallback = NULL;

bool stop;

extern "C"

JNIEXPORT jint JNICALL

Java_com_example_rtmpplaydemo_RtmpPlayer_nativePrepare(JNIEnv *env, jobject, jstring url) {

// Initialization

#if LIBAVCODEC_VERSION_INT < AV_VERSION_INT(55, 28, 1)

#define av_frame_alloc avcodec_alloc_frame

#endif

if (frameCallback == NULL) {

return -1;

}

// Allocate the frames

pAvFrame = av_frame_alloc();

pFrameNv21 = av_frame_alloc();

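const char* temporary = env->GetStringUTFChars(url,NULL);

char input_str[500] = {0};

// Bounded copy: a URL longer than the buffer is truncated instead of overflowing it

strncpy(input_str, temporary, sizeof(input_str) - 1);

env->ReleaseStringUTFChars(url,temporary);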

// Register all available file formats and codecs in the libraries

avcodec_register_all();

av_register_all();

avformat_network_init();

avdevice_register_all();

pFormatCtx = avformat_alloc_context();

int openInputCode = avformat_open_input(&pFormatCtx, input_str, NULL, NULL);

LOGI("openInputCode = %d", openInputCode);

if (openInputCode < 0)

return -1;

avformat_find_stream_info(pFormatCtx, NULL);

int videoIndex = -1;

// Walk the streams, find the first video stream, and record its codec information

for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++)

{

if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {

// For the stream used in this article, videoIndex turned out to be 1

videoIndex = i;

break;

}

}

if (videoIndex == -1) {

return -1;

}

pCodecCtx = pFormatCtx->streams[videoIndex]->codec;

AVCodec *pCodec = avcodec_find_decoder(pCodecCtx->codec_id);

avcodec_open2(pCodecCtx, pCodec, NULL);

int width = pCodecCtx->width;

int height = pCodecCtx->height;

LOGI("width = %d , height = %d", width, height);

int numBytes = av_image_get_buffer_size(AV_PIX_FMT_NV21, width, height, 1);

v_out_buffer = (uint8_t *) av_malloc(numBytes * sizeof(uint8_t));

av_image_fill_arrays(pFrameNv21->data, pFrameNv21->linesize, v_out_buffer, AV_PIX_FMT_NV21,

width,

height, 1);

pImgConvertCtx = sws_getContext(

pCodecCtx->width, // source width

pCodecCtx->height, // source height

pCodecCtx->pix_fmt, // source pixel format

pCodecCtx->width, // destination width

pCodecCtx->height, // destination height

AV_PIX_FMT_NV21, // destination pixel format

SWS_FAST_BILINEAR, // scaling algorithm; for details on this parameter see http://www.cnblogs.com/mmix2009/p/3585524.html

NULL,

NULL,

NULL);

pPacket = (AVPacket *) av_malloc(sizeof(AVPacket));

// onPrepared callback

jclass clazz = env->GetObjectClass(frameCallback);

jmethodID onPreparedId = env->GetMethodID(clazz, "onPrepared", "(II)V");

env->CallVoidMethod(frameCallback, onPreparedId, width, height);

env->DeleteLocalRef(clazz);

return videoIndex;

}

extern "C"

JNIEXPORT void JNICALL

Java_com_example_rtmpplaydemo_RtmpPlayer_nativeStop(JNIEnv *env, jobject) {

// Stop playback

stop = true;

if (frameCallback == NULL) {

return;

}

jclass clazz = env->GetObjectClass(frameCallback);

jmethodID onPlayFinishedId = env->GetMethodID(clazz, "onPlayFinished", "()V");

// Fire the onPlayFinished callback

env->CallVoidMethod(frameCallback, onPlayFinishedId);

env->DeleteLocalRef(clazz);

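// Release resources

sws_freeContext(pImgConvertCtx);

av_free(pPacket);

// Frames from av_frame_alloc() are released with av_frame_free()

av_frame_free(&pFrameNv21);

av_frame_free(&pAvFrame);

// The NV21 output buffer was allocated separately with av_malloc()

av_free(v_out_buffer);

avcodec_close(pCodecCtx);

avformat_close_input(&pFormatCtx);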

}

extern "C"

JNIEXPORT void JNICALL

Java_com_example_rtmpplaydemo_RtmpPlayer_nativeSetCallback(JNIEnv *env, jobject,

jobject callback) {

// Set the callback

if (frameCallback != NULL) {

env->DeleteGlobalRef(frameCallback);

frameCallback = NULL;

}

frameCallback = (env)->NewGlobalRef(callback);

}

extern "C"

JNIEXPORT void JNICALL

Java_com_example_rtmpplaydemo_RtmpPlayer_nativeStart(JNIEnv *env, jobject) {

// Start playback

stop = false;

if (frameCallback == NULL) {

return;

}

// Read packets

int count = 0;

while (!stop) {

if (av_read_frame(pFormatCtx, pPacket) >= 0) {

// Decode

int gotPicCount = 0;

int decode_video2_size = avcodec_decode_video2(pCodecCtx, pAvFrame, &gotPicCount,

pPacket);

LOGI("decode_video2_size = %d , gotPicCount = %d", decode_video2_size, gotPicCount);

LOGI("pAvFrame->linesize %d %d %d", pAvFrame->linesize[0], pAvFrame->linesize[1],

pCodecCtx->height);

if (gotPicCount != 0) {

count++;

sws_scale(

pImgConvertCtx,

(const uint8_t *const *) pAvFrame->data,

pAvFrame->linesize,

0,

pCodecCtx->height,

pFrameNv21->data,

pFrameNv21->linesize);

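// Size of the packed NV21 output buffer; the decoder's linesize may include padding, so it is not used here

int dataSize = av_image_get_buffer_size(AV_PIX_FMT_NV21, pCodecCtx->width, pCodecCtx->height, 1);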

LOGI("pAvFrame->linesize %d %d %d %d", pAvFrame->linesize[0],

pAvFrame->linesize[1], pCodecCtx->height, dataSize);

jbyteArray data = env->NewByteArray(dataSize);

env->SetByteArrayRegion(data, 0, dataSize,

reinterpret_cast<const jbyte *>(v_out_buffer));

// onFrameAvailable callback

jclass clazz = env->GetObjectClass(frameCallback);

jmethodID onFrameAvailableId = env->GetMethodID(clazz, "onFrameAvailable", "([B)V");

env->CallVoidMethod(frameCallback, onFrameAvailableId, data);

env->DeleteLocalRef(clazz);

env->DeleteLocalRef(data);

}

}

av_packet_unref(pPacket);

}

}
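The exported function names in the listing above, such as Java_com_example_rtmpplaydemo_RtmpPlayer_nativePrepare, follow the JNI naming convention: the prefix `Java_`, the fully qualified class name with separators turned into underscores, another underscore, and the method name. The sketch below derives such a name for a non-overloaded native method. `JniNames` is a hypothetical helper written for illustration, and it only handles ASCII names; the additional JNI escapes (for non-ASCII characters, `;` and `[`) and the signature suffix used for overloaded methods are left out.

```java
// Hypothetical helper for illustration; not part of the JDK or the NDK.
final class JniNames {

    /** Escapes one name component using the ASCII subset of the JNI rules. */
    static String mangle(String component) {
        StringBuilder out = new StringBuilder();
        for (char c : component.toCharArray()) {
            if (c == '.') {
                out.append('_');   // package separator becomes an underscore
            } else if (c == '_') {
                out.append("_1");  // a literal underscore is escaped as _1
            } else {
                out.append(c);
            }
        }
        return out.toString();
    }

    /** Builds the C symbol name that the JVM looks up for a native method. */
    static String nativeSymbol(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    public static void main(String[] args) {
        System.out.println(
                nativeSymbol("com.example.rtmpplaydemo.RtmpPlayer", "nativePrepare"));
    }
}
```

If the Java package or class is renamed, the C++ function names have to change with it, otherwise the first call to the native method fails with an UnsatisfiedLinkError.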

2. Data callbacks in the Java layer

Once the JNI work above is done we have the decoded raw data. All that remains is to pass it to the Java layer, which we do with a callback.

// RTMP callbacks

public interface PlayCallback {

// Called when the stream is prepared

void onPrepared(int width, int height);

// Called for each decoded frame

void onFrameAvailable(byte[] data);

// Called when playback finishes

void onPlayFinished();

}

Next we only need to pass this callback into the native layer, which then hands the decoded data back to Java through JNI.

RtmpPlayer.getInstance().nativeSetCallback(new PlayCallback() {

@Override

public void onPrepared(int width, int height) {

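// nativeStart() loops until stopped and would block the calling thread, so run it on a worker thread

new Thread(new Runnable() {

@Override

public void run() {

RtmpPlayer.getInstance().nativeStart();

}

}).start();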

}

@Override

public void onFrameAvailable(byte[] data) {

// Raw frame data, in NV21 format

Log.i(TAG, "onFrameAvailable: " + Arrays.hashCode(data));

surfaceView.refreshFrameNV21(data);

}

@Override

public void onPlayFinished() {

// Playback finished

}

});

// Prepare the stream

int code = RtmpPlayer.getInstance().prepare("rtmp://58.200.131.2:1935/livetv/hunantv");

if (code == -1) {

// A code of -1 means preparing the RTMP stream failed

Toast.makeText(MainActivity.this, "prepare Error", Toast.LENGTH_LONG).show();

}

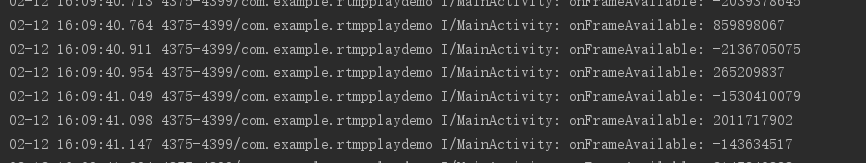

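The data delivered to onFrameAvailable is the NV21 data we need. The screenshot shows the callbacks I received while playing Hunan TV: the hashCode differs on every callback, which suggests the data is being refreshed in real time.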
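Since the rest of the pipeline works on this NV21 byte array, it helps to know how it is laid out: a full-resolution Y (luma) plane of width × height bytes, followed by a half-resolution plane of interleaved V and U bytes. The sketch below computes the buffer size and the byte offsets for one pixel. It assumes a tightly packed buffer (row stride equal to the width, which is what an alignment of 1 in av_image_get_buffer_size gives) and even dimensions. `Nv21Layout` is a hypothetical helper name used only for illustration.

```java
import java.util.Arrays;

// Hypothetical helper for illustration; assumes packed NV21 with even width and height.
final class Nv21Layout {

    /** Total bytes: width*height for Y plus width*height/2 for interleaved VU. */
    static int bufferSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Offset of the luma byte for pixel (x, y). */
    static int yIndex(int width, int x, int y) {
        return y * width + x;
    }

    /** Offset of the V byte shared by the 2x2 block that contains pixel (x, y). */
    static int vIndex(int width, int height, int x, int y) {
        return width * height + (y / 2) * width + (x / 2) * 2;
    }

    /** In NV21 the U byte directly follows its V byte. */
    static int uIndex(int width, int height, int x, int y) {
        return vIndex(width, height, x, y) + 1;
    }

    /** Copies out the Y plane, which on its own is a grayscale image. */
    static byte[] lumaPlane(byte[] nv21, int width, int height) {
        return Arrays.copyOfRange(nv21, 0, width * height);
    }

    public static void main(String[] args) {
        System.out.println(bufferSize(1280, 720)); // bytes per 1280x720 frame
    }
}
```

For a 1280 × 720 stream this gives 1,382,400 bytes per frame, which is a quick sanity check to run against `data.length` in onFrameAvailable.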

3. Interaction between the Java layer and JNI

A singleton class, RtmpPlayer, serves as the channel between JNI and the Java layer.

public class RtmpPlayer {

private static volatile RtmpPlayer mInstance;

private static final int PREPARE_ERROR = -1;

private RtmpPlayer() {

}

// Double-checked locking prevents multiple threads from creating more than one instance

public static RtmpPlayer getInstance() {

if (mInstance == null) {

synchronized (RtmpPlayer.class) {

if (mInstance == null) {

mInstance = new RtmpPlayer();

}

}

}

return mInstance;

}

// Prepare the stream; nativePrepare is called once and its result is reused

public int prepare(String url) {

int code = nativePrepare(url);

if(code == PREPARE_ERROR){

Log.i("rtmpPlayer", "PREPARE_ERROR ");

}

return code;

}

// Load the native library

static {

System.loadLibrary("rtmpplayer-lib");

}

// Prepare the stream

private native int nativePrepare(String url);

// Start playback

public native void nativeStart();

// Set the callback

public native void nativeSetCallback(PlayCallback playCallback);

// Stop playback

public native void nativeStop();

}
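The comment in the callback example notes that the nativeStart() loop blocks its caller and belongs on a worker thread. The sketch below shows one way to structure that in plain Java: a loop guarded by a stop flag, started on its own thread, with the stop call waiting for the loop to exit before anything is released. It is an illustration under assumptions, not the demo's code: `BlockingLoopRunner` is a hypothetical class, and `blockingLoop()` is only a stand-in for the native decode loop.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical wrapper for illustration; blockingLoop() stands in for nativeStart().
final class BlockingLoopRunner {
    private final AtomicBoolean stop = new AtomicBoolean(false);
    private final AtomicInteger frames = new AtomicInteger();
    private Thread worker;

    /** Loops until a stop is requested, like the native read/decode loop. */
    private void blockingLoop() {
        while (!stop.get()) {
            frames.incrementAndGet(); // pretend one frame was decoded
            Thread.yield();
        }
    }

    /** Starts the loop on a worker thread so the caller is not blocked. */
    void start() {
        stop.set(false);
        worker = new Thread(this::blockingLoop, "rtmp-decode");
        worker.start();
    }

    /** Requests a stop and waits for the loop to exit before returning. */
    void stopAndJoin() {
        stop.set(true);
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt status
        }
    }

    boolean isRunning() {
        return worker != null && worker.isAlive();
    }

    int frameCount() {
        return frames.get();
    }

    public static void main(String[] args) {
        BlockingLoopRunner runner = new BlockingLoopRunner();
        runner.start();
        while (runner.frameCount() == 0) {
            Thread.yield(); // wait until the loop has produced something
        }
        runner.stopAndJoin();
        System.out.println("running after stop: " + runner.isRunning());
    }
}
```

Waiting for the loop to finish before freeing anything matters for the native side as well: if resources are released while the decode loop is still using them on another thread, a crash is likely.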

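IV. Summary

At this point we have obtained the raw NV21 data. Because time is limited and there is a lot to cover, the article is split into two parts. In part two I will explain how to draw the NV21 data with OpenGL, and how to run face recognition on the raw NV21 data and draw the face boxes. That is also why we went to such lengths to obtain raw NV21 data. If you run into problems integrating ffmpeg in this part, see the demo I uploaded in the appendix at the end of part two; working from the demo may make it easier to get started.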